A news story broke in the last week about an image of an Australian politician, shown as a still while a journalist introduced a story. The story itself is irrelevant here; it is the image used to introduce it that matters:

It turns out that the image of Ms Purcell (the politician in the photo above and below) was extended using Adobe Photoshop and “Generative Fill”, starting from a smaller image than the one on the right above:

The Australian Broadcasting Corporation (ABC) has a story on this which is worth a read.

They look at it as a question of ethics, and Ms Purcell called out sexism in social media. All of which may or may not be true. Adobe has naturally defended its tool, which seems reasonable to me.

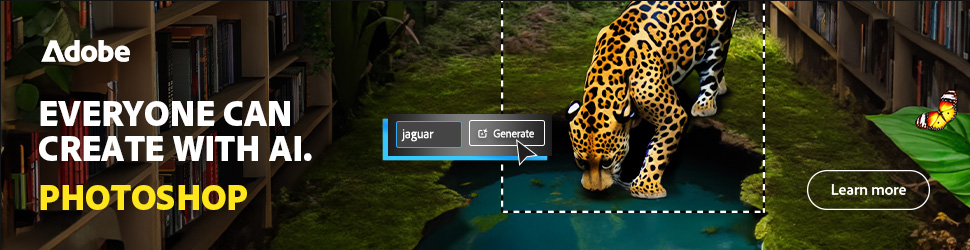

Indeed, the appeal of Generative AI tooling is clear from Adobe’s own advertising:

The fact remains that whoever used the generative fill tool (perhaps a digital artist) and the editor felt that the result did not change the story but did serve its purpose as the lead-in to their piece.

However, I want to raise the question of what impact the change had on the viewer, who saw this image and perhaps jumped to some conclusions based upon what they saw. Pretty quickly you come to the realisation that the images you see from a news media organisation are not 100% factual. It is no longer news, but entertainment. This small edit may alter the viewer’s perception of, and predisposition to, a topic.

People have historically trusted news organisations to show us facts and to inform us. We have inherently taken social media as being somewhat less trusted. We hear about journalistic integrity. And while this edit is minor, it is the fact that it has been detected, highlighted, and confirmed that is slightly alarming.

Deepfake voice and video have been around for some time now, and we always want to ensure that the data we rely upon comes from credible sources. Perhaps the use of Generative AI in news media and newspapers should be frowned upon or forbidden.

Media (entertainment) organisations that wish to sway the political discourse may, of course, be doing much more of this. Those are the media outlets with a less-than-stellar reputation, where the astute consumer will understand that what’s presented may be a version of the truth that is enhanced for various purposes.

If it is for satire, then that’s fine (if the platform or source is understood to be satirical).

If it is to undermine society, influence elections and politics, and shape public life for personal gain, that’s not what I would like in my society.

Perhaps this is an innocent mistake, with no malice or forethought about how such content fill may change perceptions.

Perhaps what is needed is some training for journalists (and their supporting image editors), and a statement from these organisations on their use of generated content?