Thirty years ago, in 1994, I started studying at The University of Western Australia (UWA). At the start of the semester, an Orientation Day (O-Day) was held, where the various student clubs tried to recruit members.

Starting as a Computer Science student, I gravitated towards the University Computer Club stand, and signed up to become a member for a few dollars. Founded in 1974, it pre-dates and outlasts the famed Homebrew Computer Club (1975 – 1986), and even pre-dates the UWA Department of Computer Science.

This year the club turns 50, and a dinner was held.

Around 90 past and present members met for dinner at The UWA University Club function centre to reminisce, celebrate, and look forward to the future.

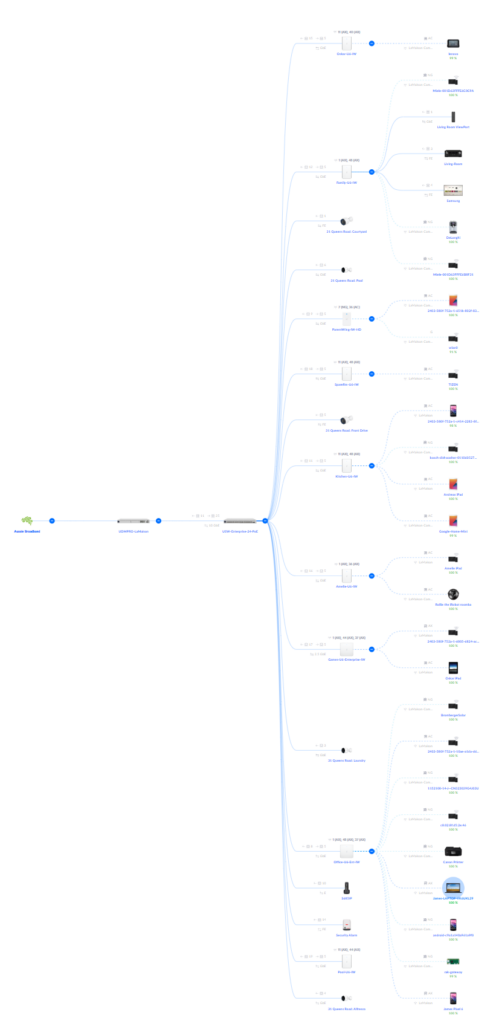

One key element in the ongoing success of a student club is having a space to congregate and to store equipment. Without a physical space that can be the club, it becomes very ephemeral, and such organisations often disappear. The UWA Student Guild has supported the UCC with space for most of its 50-year history, and since the early 1990s, this has been a large space in the loft of Cameron Hall.

In the picture above, you can see a green roof space on the left-hand side: this is the UCC Machine Room. A few of us built this space around 1996 to house some of the servers that we had acquired, and to duct the air-conditioning (hanging from a window) to keep them cool. Nearly 30 years later, this structure is still standing, made from wood purchased from Bunnings and a pair of frosted glass doors acquired from a recycling centre in Bayswater.

On the shelves you can see manuals – lots of them, for things like BeOS, NextStep, various programming languages, Sun hardware and IBM hardware. Also on the shelves are various pieces of hardware, cables, connectors and adaptors. On the tables are terminals, 3D printers, soldering irons, disk packs, tape reels and half-built robot brains, spanning decades of technology changes. Posters from events past and present adorn surfaces, encouraging participation in activities, experimentation in software and hardware, and more.

One thing clear from the pictures shown is the impact technology has had on our society. In 1994 I had a digital camera, a Kodak DC40. I took, stored and retained many photos, straight to digital, when most people were still using film (and taking that film to their local pharmacist/chemist to be processed and printed!). Today, everyone uses digital photography, mostly from their phones. It’s normalised, ubiquitous, and the incremental cost of an image is practically zero (just the storage cost of the data produced). The quality today is also much better than it was 30 years ago.

Dr Williams (above) was one of the first in the world to put a CCD camera on the end of a telescope at the WA Observatory to record images, leading to many observations that would historically have been missed (not to mention the flexibility to be one of the first astronomers working from home on cold nights).

Along one wall is a framed colour picture, taken by a West Australian newspaper photographer around 1997 or so. It shows a series of old IBM 360 cabinets – parts of a large mainframe computer that was being disposed of from the UWA storage facility in the suburb of Shenton Park. Many old computers had names; this one was called Ben. It had been donated to the UCC well before my arrival, but the time had come for the storage facility to be repurposed, and Ben had to go. Luckily it was being donated to a museum collector, and over the years I believe it made its way to the Living Computer Museum in Seattle.

But sitting on the wall of the UCC for the last 30 years has been my picture, watching thousands of UCCans arrive fresh-faced, and seeing them learn, connect and evolve into some of the individuals who have powered organisations like Apple, Google, Amazon, Shell, BHP, Rio Tinto, The Square Kilometre Array, and many more.

I served as UCC President in 1996, and I helped organise the UCC’s 18th, 21st, and 25th anniversaries. Now, at 50, it’s clear that having the physical space to meet – and eat pizza, discuss news, share tips and skills – has been a key part of the longevity of the UCC.

Here’s to the next 50 years of the UCC!