I try to stay up to date with all things Cloud, and have done so for more than a decade. I recently came across a social media post entitled “Is it safe to move to the cloud?”, and with that much experience I had so many immediate thoughts that this post was the result.

My immediate reaction was “Is it safe to NOT move to The Cloud?”, but then I thought about the underlying problems with all digital solutions. The key issue is understanding total cost of ownership (TCO), and ensuring the right cost is paid over the operating life of the solution, rather than the lowest cost, as is so typical.

The truth is that with digital systems, things change all the time. If those systems face untrusted networks (such as the Internet), or process untrusted data (such as input from humans), then there are issues lurking.

Take, as an example, any Java implementation that used the very popular Log4j library for logging. In December 2021 a serious vulnerability (CVE-2021-44228, widely known as Log4Shell) was disclosed: logging a specially crafted message could cause the library to fetch and run code from a server chosen by the attacker. Error messages quite often include the offending input that failed validation or caused an exception, so untrusted data could trigger the vulnerability via this wildly popular and heavily used library.

It’s not that anyone had done anything bad on purpose. No one had spotted it (and reported it to the developer of the library) earlier.

Of course, the correct thing happened: an updated version of the library was released. Then vendors updated their products to include the newer version of the Log4j library. And then your operations team updated your deployment of the application.

Or did they?
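One way to find out is to look at what is actually deployed rather than assume. The following is a minimal sketch in Python, assuming the application ships its dependencies as separate JAR files with the usual `log4j-core-<version>.jar` naming. The function names and the `MINIMUM_VERSION` threshold are illustrative assumptions, not an official scanner; the threshold you enforce should come from the current vendor advisory.

```python
import re
from pathlib import Path

# Matches file names such as "log4j-core-2.14.1.jar" (the usual Maven naming).
# Pre-release names such as "2.0-beta9" are not matched by this simple pattern.
JAR_PATTERN = re.compile(r"^log4j-core-(\d+(?:\.\d+)*)\.jar$")

# Assumption: the lowest version your policy accepts. Take the real value
# from the current vendor advisory rather than from this sketch.
MINIMUM_VERSION = (2, 17, 1)


def parse_version(text):
    """Turn "2.14.1" into (2, 14, 1) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def is_outdated(jar_name, minimum=MINIMUM_VERSION):
    """Return True if the file is a log4j-core JAR older than `minimum`."""
    match = JAR_PATTERN.match(jar_name)
    if match is None:
        return False  # not a log4j-core JAR, or a name we cannot parse
    return parse_version(match.group(1)) < minimum


def find_outdated_jars(root):
    """Walk a deployment directory and list outdated log4j-core JARs."""
    return sorted(
        str(path) for path in Path(root).rglob("*.jar") if is_outdated(path.name)
    )
```

Calling `find_outdated_jars("/path/to/deployment")` returns the paths that need attention. A check like this will not see libraries repackaged inside a "fat" JAR, which is one more reason the people who built the system are well placed to maintain it.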

There’s a phrase in IT operations that fills me with fear: “Transition to Support”. It indicates we’re punting operational responsibility for the solution to a team that did not build it and does not know how to make major changes to the application. We’re sending it to a team that already looks after other digital solutions, and adding one more thing for them to check is operational and to maintain. As they are often overwhelmed with multiple solutions, they do the simplest thing: check that it is operational, not that it is Well Maintained.

Transition to support: the death knell for Well-Maintained systems

James Bromberger

I’ve seen first hand that critical enterprise systems, the line-of-business processing that is the core of the business, are best served when the smart people who built them stay to operate them in a DevOps approach. This team can make the major surgical changes that are needed after deployment, as business conditions and cyber threats change.

The concern here is cost. Retaining development teams costs more than dumping large numbers of systems on understaffed Support teams. Or support gets sent offshore to external providers who may spend 30 seconds checking that the system works, but no time investigating the error messages, whose resolution may require a software update.

It’s a question of cost.

A short-term CIO makes their hero status by cutting costs. In the immediate term this has only a positive impact on the balance sheet. But as time goes on, the risks of poor maintenance go up. Once the financial year has ended, short-term EBITDA shows massive growth, and a hero’s party has been given for the CIO, they miraculously depart for another job on the strength of that short-term success.

Next up, the original company finds that its digital solution needs to be updated, but there is no one left who understands it well enough to make the change.

The smart people were let go. They were seen as a cost, not part of the business.

So let’s rephrase the question: “Is it safe to move to the cloud with your current IT management and maintenance approach?” Possibly not: you probably have to modify the way you do a lot of things, including how you structure your teams and org units. You may need to increase training for teams who will now take on full responsibility for workloads, instead of just being “the network guy”. But this is an opportunity; those teams can now feel that THEY are the service team for a workload that supports something more substantial than just rack-and-stack of storage. By moving to separate DevOps teams per critical workload, you can have them innovate independently, but collaborate on standards and improvements. You could even encourage a friendly competition on addressing technical debt, or on the number of user feature improvements requested (and satisfied).

So is it safe to move to the Cloud? It depends on who is doing it, how much knowledge and experience they have, and what happens next in your operating model.

The Cloud is not just another data centre. And TCO isn’t just cloud costs, and it isn’t just people cost. Sometimes the cost is the compliance failure and fine you get by inadvertently removing the operating model that would have prevented a data breach.

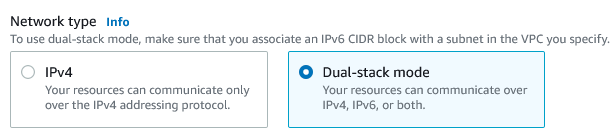

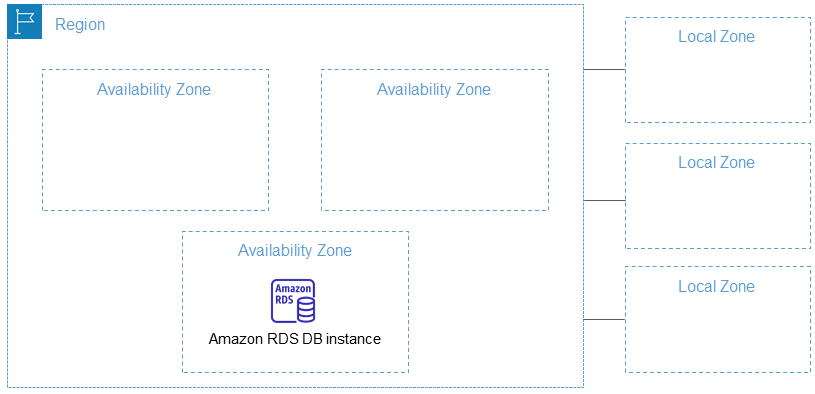

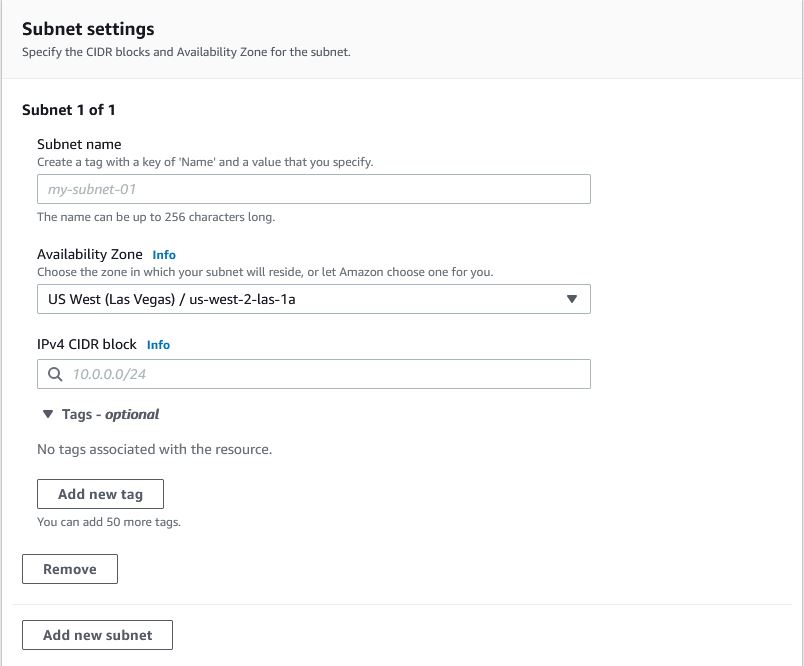

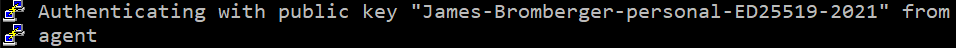

It’s been 7 years since I (and my colleagues at Ajilon/Modis, soon to be Akkodis) moved the Land Registry of Western Australia, the state’s critical government registry of property ownership, into the AWS Cloud for Landgate. We’ve kept a DevOps approach for the solution, ensuring it was not just Well-Architected, but Well Maintained. A small DevOps crew now ensures that Java updates, third-party library updates and more get applied. They also maintain the Cloud environment: load balancing and virtual machine types and images (AMIs) get updated, managed relational database versions get upgraded, newer TLS versions get supported and, more importantly, older versions get deprecated and disabled. FinOps, DevOps, and collaboration.
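To make the TLS point concrete, here is a minimal sketch using Python's standard `ssl` module. It assumes a Python service that terminates TLS itself, which is not how the Landgate solution is described above; in a deployment like that one, the equivalent setting would more likely live in the load balancer configuration. The helper name `build_server_context` is hypothetical.

```python
import ssl


def build_server_context(minimum=ssl.TLSVersion.TLSv1_2):
    """Create a server-side TLS context that refuses older protocol versions.

    Assumption: TLS 1.2 as the floor is an illustrative policy choice;
    raise it as your clients allow.
    """
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    # Handshakes offering anything older than `minimum` are rejected.
    context.minimum_version = minimum
    return context


# A real service would also load its certificate before accepting
# connections, for example with context.load_cert_chain(...).
context = build_server_context()
```

The useful part is that the floor is a single, reviewable setting. Raising it to `ssl.TLSVersion.TLSv1_3` later is a one-line change, but only a team that is still actively maintaining the system will make it.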