A few days ago, AWS announced Lambda support for their CodeBuild service.

CodeBuild sits amongst a slew of Code* services, which developers can pick and choose from to get their jobs done. I’m going to concentrate on just three of them:

- CodeCommit: a managed Git repository

- CodeBuild: a service to launch compute* to execute software compile/build/test tasks

- CodePipeline: a CI/CD pipeline that helps orchestrate the pattern of build and release actions across different environments

My common use case is for publishing (mostly) static web sites. Being a web developer since the early 1990s, I’m pretty comfortable with importing some web frameworks, writing some HTML and additional CSS, grabbing some images, and then publishing the content.

That content is typically deployed to an S3 Bucket, with CloudFront sitting in front of it, and Route53 doing its duty for DNS resolution… times two or three environments (dev, test, prod).

CodePipeline can be automatically kicked off when a developer pushes a commit to the repo. For several years I have used the native CodePipeline service to deploy this artifact, but there have always been a few niggles.

As a developer, I also like having some pre-commit hooks. I like to ensure my HTML is reasonable, that I haven’t put any credentials in my code, etc. For this I use the pre-commit hooks.

Here’s my “.pre-commit-config.yaml” file, that sits in the base of my content repo:

    repos:
      - repo: https://github.com/pre-commit/pre-commit-hooks
        rev: v4.4.0
        hooks:
          - id: mixed-line-ending
          - id: trailing-whitespace
          - id: detect-aws-credentials
          - id: detect-private-key
      - repo: https://github.com/Lucas-C/pre-commit-hooks-nodejs
        rev: v1.1.2
        hooks:
          - id: htmllint

There are a few more “dot” files such as “.htmllintrc” that also get created and persisted to the repo, but here’s the catch: I want them there for the developers, but I want them purged when being published.

Using the original CodePipeline with the native S3 Deployer was simple, but didn’t give me the opportunity to tidy up. That would require CodeBuild.

However, until this new announcement, using CodeBuild meant defining a whole EC2 instance (and VPC for it to live in) and waiting the 20 – 60 seconds for it to start before running your code. The time and cost weren’t worth it, in my opinion.

Until now, with Lambda.

I defined (and committed to the repo) a buildspec.yml file, and the commands in the build section show what I am tidying up:

    version: 0.2
    phases:
      build:
        commands:
          - rm -f buildspec.yml .htmllintrc .pre-commit-config.yaml package-lock.json package.json
          - rm -rf .git

Yes, the buildspec.yml file is one of the files I don’t want to publish.
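If you want to rehearse that tidy-up before trusting it to a pipeline, something like the following sketch may help. It runs the same two `rm` commands against a throwaway directory; the scratch directory and the fake file contents (`index.html`, `assets/site.css`) are my own placeholders, not part of the real site.

```shell
#!/bin/sh
# Sketch: rehearse the buildspec tidy-up in a throwaway directory.
set -eu

workdir=$(mktemp -d)   # hypothetical scratch copy standing in for the repo
cd "$workdir"

# Fake repo contents: publishable files plus the developer-only files.
mkdir -p .git assets
echo '<html></html>' > index.html
touch assets/site.css .git/HEAD
touch buildspec.yml .htmllintrc .pre-commit-config.yaml package-lock.json package.json

# The same two commands as in the build phase of the buildspec.
rm -f buildspec.yml .htmllintrc .pre-commit-config.yaml package-lock.json package.json
rm -rf .git

# Whatever is left is what would be published.
remaining=$(find . -type f | LC_ALL=C sort)
echo "$remaining"
```

Only the page and its asset should be listed at the end; if a dot file still shows up, it needs adding to the `rm -f` line.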

Time to change the pipeline order, and include a Build stage that creates a new output artifact from the tidied-up source. The above buildspec.yml file then has an additional section at the end:

    artifacts:
      files:
        - '**/*'

In the CodeBuild job config, we define a new name for the output artifact; in this case I called it “TidySource”. However, there was an issue with the output artifact from this.

When CodeCommit triggers a build, it makes a single artifact available to the pipeline: the ZIP contents from the repo, in an S3 Bucket for the pipeline. The format of this object’s key (name) is:

    s3://codepipeline-${REGION}-${ID}/${PIPELINENAME}/SourceArti/${BUILDID}

The original S3 Deployer in CodePipeline understood that, and gave you the option to decompress the object (zip file) when it put it in the configured destination bucket.

CodeBuild supports multiple artifacts, and its format for the output object defined from the buildspec is:

    s3://codepipeline-${REGION}-${ID}/${PIPELINENAME}/SourceArti/${BUILDID}/${CODEBUILDID}

As such, the S3 Deployer would then look for an object matching the first format, and fail.
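To make the mismatch concrete, here is a small sketch that builds both keys from placeholder values, taking the two formats quoted above at face value. Every value assigned below is made up for illustration; none of them are real identifiers from my pipeline.

```shell
#!/bin/sh
# Sketch: build both object keys from placeholder values to show the mismatch.
set -eu

# All of these values are made-up placeholders.
REGION="ap-southeast-2"
ID="exampleid"
PIPELINENAME="my-site"
BUILDID="AbCdEfG"
CODEBUILDID="HiJkLmN"

bucket="s3://codepipeline-${REGION}-${ID}"

# Key shape for the source artifact (the zip of the repo).
source_key="${bucket}/${PIPELINENAME}/SourceArti/${BUILDID}"

# Key shape quoted above for the CodeBuild output: one extra path segment.
build_key="${source_key}/${CODEBUILDID}"

# Strip the shared prefix to isolate what differs between the two keys.
extra=${build_key#"$source_key"/}

echo "deployer looks for: ${source_key}"
echo "object written at:  ${build_key}"
echo "extra segment:      ${extra}"
```

A lookup using the first shape stops one path segment short of where the second shape puts the object.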

Hmmm.

OK, I had one more niggle about the S3 deployer: it doesn’t tidy up. If you delete something from your repo, the S3 deploy does not delete it from the deployment location – it just unpacks over the top, leaving previously deployed files in place.
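The difference between “unpack over the top” and a deploy that also deletes can be seen with nothing more than two local directories. This is only a simulation under my own assumptions: `repo` and `bucket` are scratch directories standing in for the source and the deployment location, and the file names are invented.

```shell
#!/bin/sh
# Sketch: copy-over-the-top versus a mirror that also deletes, using local directories.
set -eu

scratch=$(mktemp -d)
src="$scratch/repo"      # stands in for the repo contents
dest="$scratch/bucket"   # stands in for the deployment location
mkdir -p "$src" "$dest"

# First release: two files deployed.
echo v1 > "$src/index.html"
echo old > "$src/old-page.html"
cp -R "$src/." "$dest/"

# Second release: old-page.html has been deleted from the repo.
rm "$src/old-page.html"
echo v2 > "$src/index.html"

# Copy-over-the-top deploy: the stale file survives.
cp -R "$src/." "$dest/"
after_copy=$(cd "$dest" && find . -type f | LC_ALL=C sort)

# Mirror-style deploy: remove anything in dest that no longer exists in src.
(cd "$dest" && find . -type f) | while IFS= read -r f; do
  [ -e "$src/$f" ] || rm "$dest/$f"
done
after_mirror=$(cd "$dest" && find . -type f | LC_ALL=C sort)

echo "after copy:"
echo "$after_copy"
echo "after mirror:"
echo "$after_mirror"
```

After the plain copy, `old-page.html` is still being served; only the mirror step gets rid of it.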

So my last change was to ditch both the output artifact from CodeBuild and the original S3 deployer itself, and use the trusty aws s3 sync command with a few variables defined in the pipeline:

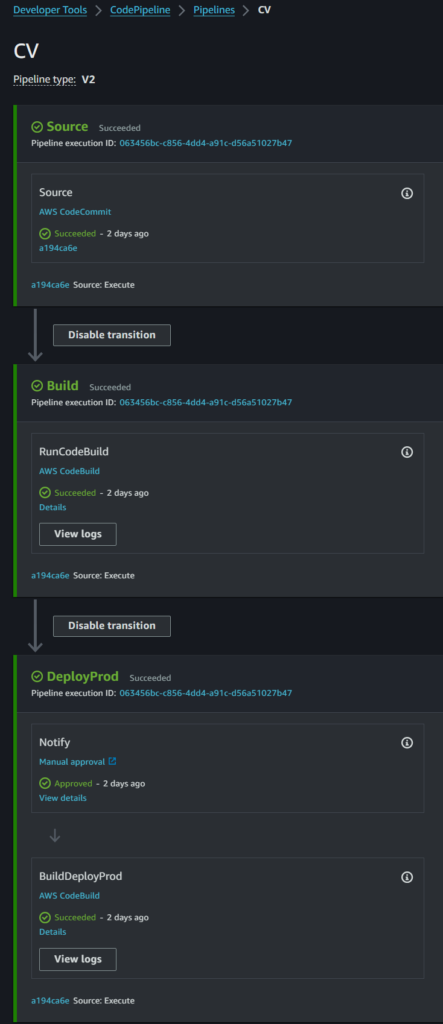

    - aws s3 --region $AWS_REGION sync . s3://${S3DeployBucket}/${S3DeployPrefix}/ --delete

So the pipeline now looks like:

You can view the resulting web site at https://cv.jamesbromberger.com/. If you read the footnotes there, they will tell you about some of the pipeline. Now I have a new place to play in: automating some of the framework management via NPM during the build phase, and running a few sed commands to update resulting paths in HTML content.

But my big wins are:

- You can’t hit https://cv.jamesbromberger.com/.htmllintrc any more (not that there was anything secure in there, but I like to be… tidy)

- Older versions of frameworks (Bootstrap) are no longer lying around in /assets/bootstrap-${version}/.

- It’s not costing me more time or money when doing this tidy-up, thanks to Lambda.