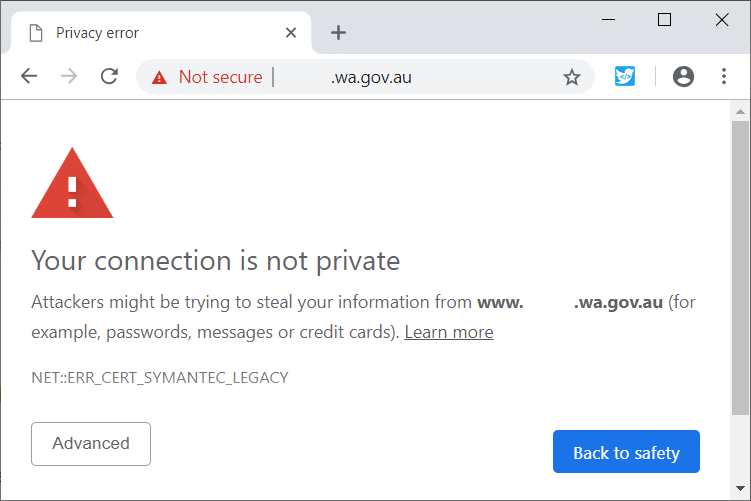

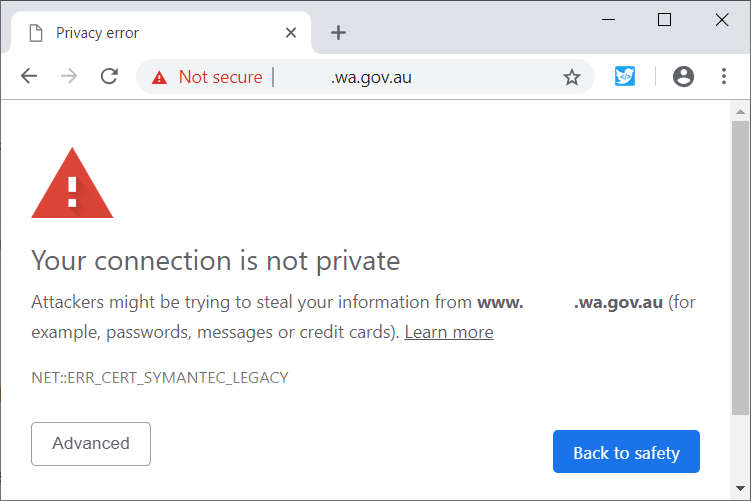

Sometime in the next week, a large swathe of web sites around the world, from Fortune 500 companies to governments and beyond, will stop being available securely, all with the next release of Google Chrome (version 70) and Firefox. The words “NET::ERR_CERT_SYMANTEC_LEGACY” are about to become well known.

For those wishing to look into the future, Google Chrome makes its upcoming releases available under the labels of Beta and, prior to that, “Canary” (i.e., in the coal mine). If you cast your eyes over a few sites with these pre-release versions, you’ll see examples like the one shown here (name removed).

Sadly, the operators of these sites may well be looking at the embedded certificate expiry (Valid Until) date and thinking this is not an issue for them. Some of these certificates appear to be valid for many more years.

The case is much worse: the organisation that these web site operators obtained the certificate from (the Certificate Authority) is about to have its status revoked, having been caught acting in ways that undermine the trust placed in it. These certificates all chain to Symantec’s legacy root certificates, which include the Thawte, GeoTrust, and RapidSSL brands.
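One rough way to triage a site is to look at who issued its certificate rather than when it expires. The sketch below is a heuristic under stated assumptions, not what the browsers do: it matches the brand names above against the issuer field of the dict returned by Python's `ssl.SSLSocket.getpeercert()`, whereas browsers decide based on the root the chain leads to, so both false positives and false negatives are possible. The names `LEGACY_BRANDS`, `issuer_names`, `looks_like_symantec_legacy`, and `fetch_cert` are hypothetical and exist only for illustration.

```python
import socket
import ssl

# Brand names taken from the article; matching on names is a heuristic only.
LEGACY_BRANDS = ("symantec", "thawte", "geotrust", "rapidssl")


def issuer_names(cert):
    """Flatten the 'issuer' field of a getpeercert() dict into {key: value}."""
    names = {}
    for rdn in cert.get("issuer", ()):
        for key, value in rdn:
            names[key] = value
    return names


def looks_like_symantec_legacy(cert):
    """Return True if any issuer attribute mentions one of the legacy brands."""
    values = " ".join(issuer_names(cert).values()).lower()
    return any(brand in values for brand in LEGACY_BRANDS)


def fetch_cert(host, port=443, timeout=5.0):
    """Fetch the validated leaf certificate of host as a dict (needs network)."""
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()
```

Something like `looks_like_symantec_legacy(fetch_cert("example.com"))` would then give a first indication. Note that if the local trust store has already dropped the relevant roots, the handshake in `fetch_cert` raises `ssl.SSLCertVerificationError` instead, which is itself a strong hint.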

You can read plenty online about this, for example from DigiCert, Mozilla, and Google. Here’s Scott Helme’s February 2018 post, and his follow-up from a recent crawl of the Alexa Top sites. Several of those sites have since updated their certificates (well done, Tigereair.com.au, stratco.com.au, naati.com.au; fixed it!).

So what’s actually going to happen?

Some disruption.

I’m sure a large number of these will be smeared across mainstream media for being “hacked”, or “offline”, when in reality, “oblivious” is closer to the point.

Poor service providers are going to tell vulnerable people to “ignore the security warnings”, and to “proceed” to the site regardless. This is BAD advice. If you are told this, you are better off ceasing to do business with the organisation, as they do not understand the security they are dealing with. If this is the advice of your employer, then you should consider what this means for the security of your personal HR (and other) data.

There are far too many people operating, controlling, or otherwise “responsible” for large numbers of web sites who have no idea what they are actually operating. It’s evident from scanning sites and seeing those that still have legacy, vulnerable encryption in their HTTPS configuration, or worse, serve content over unencrypted HTTP. Just because you don’t value protecting your content from modification doesn’t mean your web visitors don’t value NOT being compromised when visiting you.

Web traffic interception happens every second of every day: in Wi-Fi cafes, airports, aeroplanes, and corporate LANs. TLS (formerly SSL) is the best way we have to protect the integrity of content across untrusted networks, but we’re in a constant capability race to ensure that services only offer ways to connect that minimise the risk of using untrusted networks.

Driven by a desire to not change things that appear to be working (or indeed, being either lazy, overworked, under resourced/funded, or unaware), organisations are not bringing up their drawbridge of security on their most vulnerable interfaces: those services that are facing the Internet, such as their web site or web services. This issue, when it breaks, will help highlight that some organisations and individuals should probably not be in charge of the services they currently operate.

Case in point: check out BankGradeSecurity.com, a ranking of financial institutions around the world and how well they have adopted modern encryption and security capabilities on their web site and Internet banking services.

It’s clear we’re constantly in the middle of technology transitions – IT Services are not simply done; they are either in-use and actively well-maintained, or they should be archived or removed. Anything else demonstrates cost cutting and under-valuation of the digital capability that allows an organisation to operate.

Organisations face a choice of two types of Managed Services providers today: those that understand service maintenance on behalf of their customers, and those that do not (and are still running with the same HTTPS configuration they went live with years ago).

It’s easy to spot these services: they haven’t enabled AES-GCM cipher suites or Elliptic Curve Diffie-Hellman Ephemeral (ECDHE) key exchange. Worse are those still permitting SSLv2, SSLv3, or TLS 1.0, or not yet permitting TLS 1.2 (or the shiny new TLS 1.3). And, unbelievably, some don’t enable HTTPS at all.
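As a rough illustration of those checks, here is a minimal sketch that grades a single negotiated connection using Python's standard `ssl` module. It assumes OpenSSL-style cipher names (for example `ECDHE-RSA-AES128-GCM-SHA256`) as reported by `SSLSocket.cipher()` and the protocol strings returned by `SSLSocket.version()`. The names `OLD_PROTOCOLS`, `assess_tls`, and `negotiated` are hypothetical. One handshake only shows what this client and the server agreed on, not everything the server still permits, so a dedicated scanner is needed for the full picture.

```python
import socket
import ssl

# Protocol versions the article treats as outdated, as named by SSLSocket.version().
OLD_PROTOCOLS = {"SSLv2", "SSLv3", "TLSv1"}


def assess_tls(cipher_name, protocol):
    """Return a list of findings for one negotiated cipher suite and protocol."""
    findings = []
    if protocol in OLD_PROTOCOLS:
        findings.append("outdated protocol: " + protocol)
    # TLS 1.3 suites are always AEAD with ephemeral key exchange, and their
    # names do not mention the key exchange, so only older suites are inspected.
    if protocol != "TLSv1.3":
        # ChaCha20-Poly1305 is accepted here as a modern alternative to AES-GCM.
        if "GCM" not in cipher_name and "CHACHA20" not in cipher_name:
            findings.append("no AES-GCM or ChaCha20 cipher: " + cipher_name)
        if not cipher_name.startswith("ECDHE"):
            findings.append("no ECDHE key exchange: " + cipher_name)
    return findings


def negotiated(host, port=443, timeout=5.0):
    """Connect and return (cipher_name, protocol) as negotiated (needs network)."""
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.cipher()[0], tls.version()
```

Calling `assess_tls(*negotiated("example.com"))` returns an empty list when nothing on this short checklist is flagged. Recent Python and OpenSSL builds refuse to offer SSLv2 and SSLv3 at all, so this client cannot detect a server that still permits them.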

There are more signs of stagnation if you know what to look for: lack of HTTP/2, lack of IPv6, long TTLs on DNS records, and so on, all of which indicate organisations that are stifled or don’t have the capability to understand what they are doing. Sometimes it’s corporate direction to use third-party IT operations who, again, use the cheapest unskilled and unqualified labour to deliver IT services, dressed up in marketing to make it look like they save the earth.

If you’re affected by this, consider attending Nephology’s Web Security training.