Time flies when you're doing what you love. Cloud has brought enormous change to the IT service delivery industry. Within the AWS ecosystem there is a group of senior experts in the partner (professional & managed services) community, the AWS Partner Ambassadors, and the statistics about this group tell an interesting story.

What is widely seen as the original program for expert engineers at AWS partners in Australia and New Zealand was called the Cloud Warrior program; it morphed into the Partner Ambassador program in 2017.

Formally, this pre-dates the AWS Community Heroes program by several years.

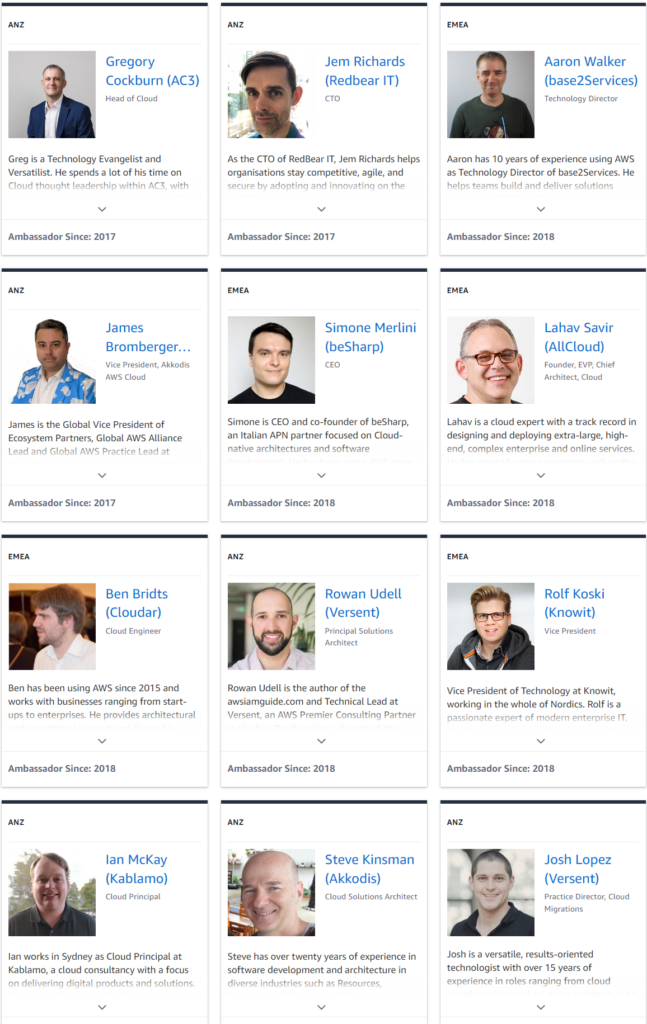

Today, you can see the current participants of the Ambassador program here. When they are sorted by date (oldest to newest), the first page shows some of the long-term experts in this group:

Original AWS Partner Ambassadors

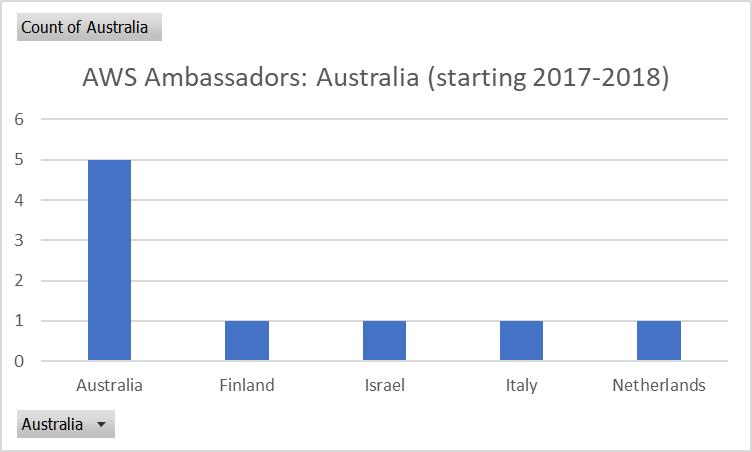

Looking through the participants sorted by date, it is clear that those who are still active in 2024 are predominantly Australian:

Country of the original and still active AWS Ambassadors, as of April 2024

Incidentally, three are listed with a start date of 2017: Greg Cockburn, Jem Richards and myself. That's 7 years! If you widen the view to Ambassadors who joined in 2017 or 2018 and are still active, the majority are also from Australia. That's the core of the AmbassadAussies.

Et ainsi? (So what?)

Cloud now has a deep history in Australia, and Australia has a long track record of adopting new technologies and building technical expertise. It's a country where many new technologies are tested before being "reinvented" in the European or US markets.

Even though we may work for different organisations during the day, as engineers we're also esteemed peers and friends. We've all crossed paths many times in the IT industry over the last few decades.

Greg Cockburn & James Bromberger in Sydney, 2024

Some of the Ambassadors have written books, and many have written blog posts and articles that have helped guide the industry toward the secure and reliable use of the AWS Cloud.

All of this gives the industry knowledge and confidence. While my favourite topics are the continuing rollout of IPv6, ever-increasing security controls, stronger crypto options, and better managed technical services, the Ambassador group covers nearly every topic, at a depth that helps advance the state of the AWS Cloud. As a community, it's key to embrace all of them. No one company has a monopoly on good ideas.