I’ve been working on a significant public-sector AWS cloud deployment (as previously mentioned) and surfed our way confidently through the June 2016 storms with a then 2-Availability-Zone architecture, complete with AWS RDS Multi-AZ, ELB and AutoScale services making the incurred AZ failure almost transparent.

However, not resting on one’s laurels, I’ve been looking at what further innovations are in place, and what impact these would have.

Earlier this year, AWS introduced a third AZ in Sydney. This has meant that a set of higher-order AWS services that themselves depend upon three AZs have now appeared. It also gives us the option to spread ourselves wider across more AZs. I’ve been evaluating this for some time, and have come to the following considerations and conclusions.

RDS Multi-AZ during an AZ outage

Multi-AZ definitely failed over and did its thing in June, but for the following 6-8 hours or so the failed AZ stayed offline, along with my configured VPC subnets in that AZ that were members of my RDS DB Subnet Group. This meant that during this period I didn’t have Multi-AZ synchronous protection.

Sure, this returned automatically when the AZ came back online but I thought about this small window a fair bit.

While AWS deploys its AZs on separate flood plains and power distribution grids, the same storm is passing over many parts of the Region at one time, so there’s a possibility that lightning could strike twice.

To alleviate this, I added a third subnet from a third AZ to my DB Subnet Group. Multi-AZ RDS has (at this time) only one standby replica. With three AZs in the group, RDS would still have a choice of two operating AZs during a single AZ failure, and so could provide me with the same level of protection.

The data in my relational database is important, and the configuration to add a third subnet in AZ C has no real financial overhead (just inter-AZ traffic at around US$0.01/GB for SQL traffic). Thus the change is mostly configuration.

I thought about not having three AZs for my RDS instance(s), and considered what I would say to my customer if there was a double failure and I didn’t move to this solution. I was picturing the reaction I would get when I tell them it was mostly just a configuration change that could have helped protect against a second failure after an AZ outage.

That was a potential conversation that I didn’t want to have! Time to innovate.

EC2 AutoScale during an AZ outage

I then thought about what happens to my Auto Scaling groups the moment an AZ goes dark. The ASG correctly reacts, and tries to recover capacity into the surviving AZ(s) that it is configured for. In the case where I have two AZs and two instances (normally one per AZ), I have lost 50% of my capacity. To return to my minimum configuration (two instances) I need the ASG to launch from only the one surviving AZ.

Me, and everyone else who is still configured for the original two AZs in the Region. Meanwhile a third AZ is sitting there, possibly idle, just not configured to be in use.

If I had three AZs configured, and my set of ASGs randomly populating two instances across these three AZs, then 1/3rd of my ASGs would have no instances in a failing AZ, and the rest would lose only one instance each. So my immediate demand from this failure has decreased: I would need to replace just 33% of my fleet. Furthermore, I am not constrained to one AZ to satisfy my (reduced) demand.
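The arithmetic above can be checked with a small sketch. It assumes each ASG places its two instances in distinct AZs and that every such placement is equally likely, which is a simplification of how Auto Scaling actually balances instances; the function names are mine, for illustration only.

```python
from fractions import Fraction
from itertools import combinations


def expected_loss(az_count: int, instances_per_asg: int = 2) -> Fraction:
    """Average fraction of the fleet lost when a single AZ fails.

    Assumption: each ASG spreads its instances over distinct AZs, and all
    placements are equally likely.
    """
    failed_az = 0  # by symmetry, any single AZ gives the same answer
    placements = list(combinations(range(az_count), instances_per_asg))
    # Each placement that includes the failed AZ loses exactly one instance.
    lost = sum(1 for placement in placements if failed_az in placement)
    return Fraction(lost, len(placements) * instances_per_asg)


def untouched_asgs(az_count: int, instances_per_asg: int = 2) -> Fraction:
    """Fraction of ASGs with no instance in the failed AZ."""
    failed_az = 0
    placements = list(combinations(range(az_count), instances_per_asg))
    safe = sum(1 for placement in placements if failed_az not in placement)
    return Fraction(safe, len(placements))


print(expected_loss(2))   # 1/2 of the fleet with two AZs
print(expected_loss(3))   # 1/3 of the fleet with three AZs
print(untouched_asgs(3))  # 1/3 of ASGs are not affected at all
```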

Migrating from 2 AZ to 3 AZ

So I had a VPC, created from a CloudFormation template. It was reasonably simple, as when starting out on deploying a reasonably sized cloud-native workload, we had no idea what it would look like before we started it. Here’s a summary:

| Purpose | AZ A | AZ B | Total IPs (approx) |
|---|---|---|---|
| Internal ELBs | 10.x.0.0/24 | 10.x.1.0/24 | 500 (/23) |
| App Servers | 10.x.2.0/24 | 10.x.3.0/24 | 500 (/23) |
| Backend Services | 10.x.4.0/24 | 10.x.5.0/24 | 500 (/23) |
| Databases | 10.x.6.0/24 | 10.x.7.0/24 | 500 (/23) |
| Misc | 10.x.8.0/24 | 10.x.9.0/24 | 500 (/23) |

My entire VPC design was a /20 constraint (some 4000 IPs), designated from a corporate topology. Hence using a /24 as a subnet, we would have 16 subnets possible in the VPC.
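For anyone wanting to check the numbers, here is a minimal sketch using Python’s standard `ipaddress` module. The range `10.0.0.0/20` is an assumption standing in for the real `10.x.0.0/20` allocation, which isn’t given here.

```python
import ipaddress

# Assumption: 10.0.0.0/20 is a placeholder for the real "10.x.0.0/20" range.
vpc = ipaddress.ip_network("10.0.0.0/20")

# Carve the VPC range into /24 subnets.
subnets = list(vpc.subnets(new_prefix=24))

print(vpc.num_addresses)        # 4096 addresses in a /20
print(len(subnets))             # 16 possible /24 subnets
print(subnets[0], subnets[-1])  # 10.0.0.0/24 10.0.15.0/24
```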

On-premise firewalls (connected by both Direct Connect and VPN) would permit access from on-premise to specific subnets, and from in-cloud subnets to specific on-premise destinations.

It became clear as our architecture evolved that having some 500 IPs for what ended up being a few Multi-AZ databases (plus a few read replicas) was probably overkill. We also didn’t need 500 addresses for miscellaneous instances and services, which is again overkill that’s only appreciated with 20/20 hindsight.

Moving Instances

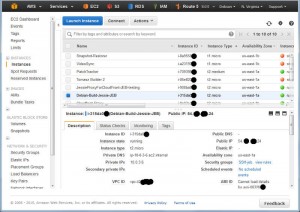

This workload was live, so there was no chance of any extended downtime. So we refer to the rules of the VPC: you can only delete a subnet if there are no interfaces present in it. Thus we have to look at ENIs, and see where they are.

Elastic Network Interfaces are visible in the AWS console and CLI. You’ll note that EC2 instances, ELBs and RDS instances all have ENIs. Anything that is “in” the VPC likely has them. So we need to jostle these around in order to reallocate.
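As a rough sketch of how to see what is still holding a subnet open, the function below lists the ENIs in one subnet. It takes an EC2 client as a parameter (in practice something like `boto3.client("ec2")`) and uses the `describe_network_interfaces` paginator with the `subnet-id` filter. The function name and the subnet ID in the usage note are hypothetical.

```python
def enis_in_subnet(ec2_client, subnet_id):
    """Return (ENI id, description) pairs for interfaces still in a subnet.

    ec2_client is expected to behave like a boto3 EC2 client.
    """
    paginator = ec2_client.get_paginator("describe_network_interfaces")
    pages = paginator.paginate(
        Filters=[{"Name": "subnet-id", "Values": [subnet_id]}]
    )
    found = []
    for page in pages:
        for eni in page["NetworkInterfaces"]:
            # The description usually hints at the owner (ELB, RDS, and so on).
            found.append((eni["NetworkInterfaceId"], eni.get("Description", "")))
    return found
```

Calling `enis_in_subnet(boto3.client("ec2"), "subnet-0123456789abcdef0")` with a real subnet ID should return an empty list once that subnet has been fully vacated.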

Let’s look at the first pair of subnets for the ELBs. I want to spread this same allocation across three AZs. Three is an unfortunate number, as splitting subnets doesn’t nicely go into threes. However, four is a good number. Taking the existing pair of /24 networks (a contiguous /23), we would re-distribute this as four /25 networks (around 120 usable IP addresses apiece). I only have 40 internal ELBs (TCP pass-through), so there’s enough room there. This would leave me with a /25 unused, possibly spare should a fourth AZ ever come along.
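The split can be sketched with the standard `ipaddress` module. Again `10.0.0.0/23` is an assumed stand-in for the real `10.x.0.0/23` block, and the usable count subtracts the five addresses AWS reserves in every VPC subnet (the first four and the last).

```python
import ipaddress

# AWS reserves the first four addresses and the last address of each subnet.
AWS_RESERVED_PER_SUBNET = 5

# Assumption: 10.0.0.0/23 is a placeholder for the real "10.x.0.0/23" block.
elb_block = ipaddress.ip_network("10.0.0.0/23")

# Split the /23 into four /25 networks: one per AZ, plus one spare.
quarters = list(elb_block.subnets(new_prefix=25))

for label, net in zip(["AZ A", "AZ B", "AZ C", "Spare"], quarters):
    usable = net.num_addresses - AWS_RESERVED_PER_SUBNET
    print(label, net, usable)
# AZ A 10.0.0.0/25 123
# AZ B 10.0.0.128/25 123
# AZ C 10.0.1.0/25 123
# Spare 10.0.1.128/25 123
```

Three in-use /25 subnets at 123 usable addresses each is where the figure of roughly 370 available addresses comes from.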

And thus it began. ELBs were updated to remove their nodes from AZ A. This meant that nodes in AZ A were out of service (ELBs must be present in the same AZ as the instances they are serving). So at the same time, ASGs were updated to likewise vacate AZ A. ELB reacted by deploying two ENIs in AZ B. ASGs reacted by satisfying their minimum requirements all from subnets in AZ B.

While EC2 instances were quick to vacate AZ A, ELB took some time to do so. Partly this is because ELB uses DNS (with low TTLs), and needs to wait until a sufficient amount of time has passed that most clients would have refreshed their cached lookups and discovered the node(s) of ELB only in AZ B. In my case (and on more than one occasion) the ELB got stuck shutting down its ENIs in AZ A.

A support call or two later, and the AZs were vacated (but we’re still up!).

At this stage, the template I used to create the VPCs was ready for its first update in 18 months. The CIDR range of each subnet is a parameter to my VPC template, so the update was going to be as simple as updating this ONLY for the now-vacant subnets.

However, there’s a catch. For some reason, CloudFormation wants to create NEW subnets before deleting old ones. I was taking my existing 10.x.0.0/24 and going to use 10.x.0.0/25 as the address space. However, since the new subnet was to be created before the old was deleted, this caused an address conflict, and the update safely rolled back (of course, this was learnt in lower environments, not production).

The solution was to stage a two-phase update to the CFN stack. The first update was to set a new temporary range that didn’t conflict – from the spare space in the VPC. Anything would be fine to use so long as (a) it was currently unused, and (b) it didn’t conflict with my final requirements.

So my first update was to set the ELB subnet in AZ A to 10.x.15.0/25, with a follow-up a few seconds later to set it to 10.x.0.0/25. I did the same with the other subnets for app servers and back-end servers.
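The decision of whether a change needs the two-phase treatment boils down to an overlap check. The sketch below models only the behaviour described above (the new subnet is created before the old one is deleted); it is not CloudFormation’s API. The function name and the `10.0.x.x` addresses are assumptions, and a real check would also compare against every other subnet in the VPC.

```python
import ipaddress


def plan_cidr_change(old_cidr: str, new_cidr: str, temp_cidr: str) -> list:
    """Return the sequence of CIDR values to apply, one stack update each.

    If the new range overlaps the old one, go via temp_cidr first so the
    create-before-delete replacement never sees a conflict.
    """
    old = ipaddress.ip_network(old_cidr)
    new = ipaddress.ip_network(new_cidr)
    if not old.overlaps(new):
        return [new_cidr]  # a single update is enough

    temp = ipaddress.ip_network(temp_cidr)
    if temp.overlaps(old) or temp.overlaps(new):
        raise ValueError("temporary range must not overlap old or new range")
    return [temp_cidr, new_cidr]


# Shrinking a /24 in place overlaps itself, so it needs two updates.
print(plan_cidr_change("10.0.0.0/24", "10.0.0.0/25", "10.0.15.0/25"))
# ['10.0.15.0/25', '10.0.0.0/25']

# Moving to a range that doesn't overlap needs only one.
print(plan_cidr_change("10.0.1.0/24", "10.0.0.128/25", "10.0.15.0/25"))
# ['10.0.0.128/25']
```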

With these subnets redefined (new subnet IDs), we could reverse the earlier shuffle: define ELBs back into AZ A, then define ASGs to span the two AZs. Next was the move to vacate AZ B. Just as with the ELBs when they left AZ A, there was a few hours’ wait for the ENIs to finally disappear.

However this time, I was moving from 10.x.1.0/24, to 10.x.0.128/25. This didn’t overlap, and wasn’t in use, so was a simple one step CloudFormation parameter update to apply.

Next was a template update (not just a parameter update) to define the subnets in AZ C, and provide their new CIDR allocations.

The final move here is to update the ELBs and ASGs to now use their third subnets.

Moving RDS

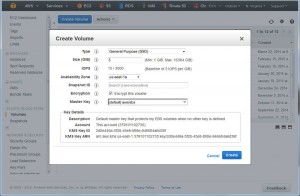

RDS Multi-AZ is a key feature underpinning the databases we use. In this mode, the ENIs for the master and the standby are in place from the moment that Multi-AZ is selected.

My first move was to force a fail-over of any RDS nodes active in AZ A. This is a reboot “with fail-over”, and incurs about a 3 minute outage. My app is durable to this, but it’s still done outside of peak service hours with notification to the client.

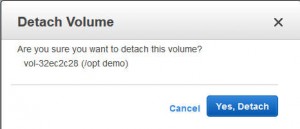

After failing over, we then temporarily modify the RDS instance to NOT be Multi-AZ. Sure enough, the ENI from AZ A is duly removed, and the subnet, once vacant, can be replaced with the smaller allocation (in my case, a /26 per AZ suffices). With the replacement subnet created, I can then update my DB Subnet Group to include this new subnet ID, and re-enable Multi-AZ. Another reboot “with fail-over”, another conversion to Single-AZ, and I can re-define the second subnet. Once more we update the DB Subnet Group, and re-enable Multi-AZ.

The final chess move was to define the third subnet in the third AZ, and include that in the DB Subnet Group.

| Purpose | AZ A | AZ B | AZ C | ‘Spare’ | Total IPs (approx) |
|---|---|---|---|---|---|
| Internal ELBs | 10.x.0.0/25 | 10.x.0.128/25 | 10.x.1.0/25 | 10.x.1.128/25 | 500 (/23), only 370 available now |
| App Servers | 10.x.2.0/25 | 10.x.2.128/25 | 10.x.3.0/25 | 10.x.3.128/25 | 500 (/23), only 370 available now |
| Backend Services | 10.x.4.0/25 | 10.x.4.128/25 | 10.x.5.0/25 | 10.x.5.128/25 | 500 (/23), only 370 available now |
| Databases | 10.x.6.0/26 | 10.x.6.64/26 | 10.x.6.128/26 | 10.x.6.192/26 and 10.x.7.0/24 | 500 (/23), only 190 available now |
| Misc | 10.x.8.0/24 | 10.x.9.0/24 | – | – | Same, yet to be re-distributed |

Things you can’t easily move

What I found was that there are a few resources that, once created, can only be moved by deleting and recreating them. WorkSpaces and Directory Service were two that, once present, aren’t currently easy to transfer between subnets. Technically instances aren’t transferable either, but since I am in an ASG world (cattle, not pets), I can terminate and instantiate at will.

Closing Thoughts

With spare addressing space available for a fourth subnet, I don’t think I’m going to have to re-organise for a while. My CIDR ranges are still consistent with their original purposes. I have plenty of addressing space to define more subnets in future (perhaps a set of subnets for Lambda-in-VPC).

There are other VPC improvements I’ve added at the same time, but I’ll save those for my next post.

#TemplateAllTheThings

Note: I also run some of the most advanced security and operation training on AWS. See https://nephology.net.au/ for information.