This is going to be a long week of learning how the world has changed. I’m already tired, and I’m not even there. My brain hurts (you’d not believe how many typos I am correcting here).

While (once again) I am not at Re:Invent in Las Vegas, Nevada, I’m tuned in to as many news sources as possible to try and catch which parts of the undifferentiated heavy lifting have changed. I’ve been one of the AWS Cloud Warriors for the last two years (2017-2018), and was lucky enough to be given a conference ticket as a result, but unfortunately I’ve not been able to get there.

While I may not be physically there, I am in spirit, having been nominated as one of the AWS Ambassadors.

However the live stream video (which has improved dramatically since 2014), the Tweets from various people, the updates on LinkedIn, RSS feeds, Release Notes, What’s New page, AWS Blog (hi Jeff), and indeed, the Recent Changes/Release History sections of lots of the documentation pages (such as this Release History page for CloudFormation) have given me more information to trawl through.

It’s now Tuesday night in Perth, Western Australia, and day two of Re:Invent, but it’s only 7am Tuesday morning in Las Vegas (yes, I’m 16 hours in the future). Here are my thoughts on the releases thus far:

100 Gb/s networking in VPC

The ENA network interface was previously limited to 25 Gb/sec per instance on the largest instance types. Indeed, it’s worth noting that most network resources are limited to some degree by the instance size within an instance family. But now a new family – the C5n instances – has interfaces capable of up to 100 Gb/sec (that’s 12.5 GB/s – little b is bits, big B is bytes).
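The bits-versus-bytes conversion is just a division by eight. A quick sketch of the arithmetic (the function name is mine, purely for illustration):

```python
def gigabits_to_gigabytes(gigabits_per_second):
    """Convert a line rate in Gb/s (bits) to GB/s (bytes): 8 bits per byte."""
    return gigabits_per_second / 8


# The previous 25 Gb/s ceiling and the new C5n 100 Gb/s ceiling.
for rate in (25, 100):
    print(f"{rate} Gb/s = {gigabits_to_gigabytes(rate)} GB/s")
```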

Much has been said about network throughput, and the comparison between ENA and SR-IOV in the AWS Cloud, and comparisons to other Cloud environments. 100 Gb/s now sets a new high bar that other vendors are yet to reach.

While it’s wonderful to have that level of throughput, it’s also worth noting that scale-out is still sometimes a good idea. 100 instances at 1 Gb/s each may provide a better solution sometimes, but then again sometimes a problem doesn’t split nicely between multiple server instances. YMMV.

Transit gateway

Managing an enterprise within AWS is usually a case of managing multiple AWS accounts. The ultimate in separation at a console/account level sometimes comes back to integration questions around network, governance and other considerations.

In March 2014 (yes, four and a half years ago), the VPC service team introduced VPC Peering, a non-transitive peering arrangement between VPCs – a non-throttling way of meshing two separate VPCs together (including in separate accounts) with no single point of failure.

This announcement now gives a transitive way (hence the name) of meshing a spread enterprise deployment. There are multiple reasons for doing so:

- Compliance: all outbound (to Internet) traffic is deemed by your corporate policy to funnel via a specific, centralised gateway.

- Management overhead: organising N VPCs to mesh together means creating (N-1)*N/2 peering arrangements, and double that number for routing table entries. If we have 4 environments (dev, test, UAT and Production), and 10 applications each in their own VPC per environment, then that’s 40 VPCs, 780 peering relationships and 1560 routing table updates.
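The full-mesh arithmetic is easy to check for your own estate. A small sketch (function names are my own; it assumes every VPC peers with every other VPC, and one route entry on each side of every peering):

```python
def full_mesh_peerings(vpc_count):
    """Peering connections needed to fully mesh `vpc_count` VPCs: N*(N-1)/2."""
    return vpc_count * (vpc_count - 1) // 2


def route_table_updates(vpc_count):
    """Each peering needs a route on both sides, so double the peering count."""
    return 2 * full_mesh_peerings(vpc_count)


environments = 4    # dev, test, UAT, Production
applications = 10   # one VPC per application per environment
vpcs = environments * applications

print(vpcs, full_mesh_peerings(vpcs), route_table_updates(vpcs))
```

Try it with 80 or 100 VPCs and the appeal of a central hub becomes obvious rather quickly.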

It’s worth noting that in some organisations, an account’s administrative users may themselves not have access to create an IGW for access to the internet; a Transit gateway may be the only way permitted for connectivity so it can be centrally managed.

But in taking central management, you now have a few considerations:

- Blast radius: if you stuff up the Transit gateway configuration, you take down the organisation. With separation and peering, each VPC is its own blast radius.

- Cost: Transit gateway isn’t free. You probably want to permit S3 Endpoints for large volume object storage

- Throughput: 50 Gb/s may seem a lot, but now there are 100 Gb/s instances

ARM based A1 Instances

In 2013, when I worked at AWS, I spoke with friends at ARM and AWS Service teams about the possibility of this happening. The attractiveness of the reduced power envelope, and cost comparison of the chip itself made it already look compelling then. This was before Windows was compiled to ARM – and that support is only strengthening. It’s heartening now to see this coming out the door, giving customers choice.

Earlier this month we saw an announcement about AMD CPUs. Now we have three CPU manufacturers to choose from in the cloud when looking to run Virtual Machines. Customers can now vote with their workloads as to what they want to use. The CPU manufacturers now have more reason to innovate and make better, faster or cheaper CPUs available. When you can switch platforms easily (you do DevOps, right? All scripted installs?) then it’s perhaps down to the cost question now.

Now, recently it was announced there will be a t3a. Wonder if there will be a t3a1?

Compute in the cloud just got even more commodity. Simon Wardley, fire up your maps.

S3 improvements (lots here)

Gosh, so much here already.

Firstly, an admission that AWS Glacier is no longer its own service, but folded under S3 and renamed as S3 Glacier. There’s a new API for glacier to make it easier to work with, and the ability to put objects to S3 and have them stored immediately as Glacier objects without having to have zero day archive Lifecycle policies.
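As a rough sketch of what writing straight into the Glacier storage class might look like from Python: the piece that matters is the `StorageClass` argument to boto3’s `put_object`. The helper names, and whatever bucket and key you pass in, are placeholders of mine; the upload function assumes boto3 is installed and AWS credentials are configured.

```python
def glacier_put_params(bucket, key, body):
    """Build put_object arguments that store the object as Glacier from the start."""
    return {
        "Bucket": bucket,
        "Key": key,
        "Body": body,
        "StorageClass": "GLACIER",  # instead of the default STANDARD class
    }


def archive_object(bucket, key, body):
    """Upload directly into the Glacier storage class (needs boto3 and credentials)."""
    import boto3  # imported here so the helper above works without boto3 installed

    s3 = boto3.client("s3")
    return s3.put_object(**glacier_put_params(bucket, key, body))
```

Calling `archive_object("my-archive-bucket", "2018/backup.tar", data)` (hypothetical names) would skip the Standard tier entirely, so there is no lifecycle rule to maintain.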

SFTP transfers – finally, a commodity protocol for file uploads that simple integrators can use, without having to deploy your own maintained, patched, fault-tolerant, scalable ingestion fleet of servers. This right here is the definition of undifferentiated heavy lifting being simplified, but with a price of 30c/hour, you’re looking at roughly US$216 a month (30 days) before you include any data transfer charges.
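The US$216 figure corresponds to an endpoint left running around the clock for a 30-day month. A back-of-envelope sketch (the 30-day month is my assumption, and data transfer is deliberately left out):

```python
HOURLY_ENDPOINT_PRICE_CENTS = 30  # the 30c/hour quoted above
HOURS_PER_DAY = 24


def monthly_endpoint_cost_usd(days_in_month=30):
    """Cost in US dollars of one always-on endpoint, excluding data transfer."""
    cents = HOURLY_ENDPOINT_PRICE_CENTS * HOURS_PER_DAY * days_in_month
    return cents / 100


print(monthly_endpoint_cost_usd())    # 30-day month
print(monthly_endpoint_cost_usd(31))  # 31-day month
```

Working in whole cents avoids floating point rounding noise in the total.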

Object Lock: the ability to put files and not be able to delete them for a period. For when you have strict compliance requirements. Currently can only be defined on a Bucket during Bucket creation.

S3 events seem to have got a lot more detailed as well, with more trigger types that can be sent to SQS, SNS, or straight to a Lambda function.

KMS with dedicated HSM storage

KMS has simplified the way that key management is done, but some organisations require a dedicated HSM for compliance reasons. Now you can tell KMS to use your custom key store (single-tenanted CloudHSM devices in your VPC) as the storage for these keys, but still use KMS APIs for your own key interaction, and use those keys for your services.

A dedicated Security Conference

Boston, End of June. Two days.

Not so new (but really recent)

CLI Version 2

Something so critical – the CLI – used by so many poor-man (poor-person) integrations and CI/CD pipelines, now with a version 2 in the works. It’s breaking changes time – but in the meantime, the v1 CLI continues to get updates.

Predictive AutoScaling

Having EC2 AutoScaling reactively scale when thresholds are breached has been great, but combining that with machine learning based upon previous scaling events to make predictive scaling is next-level.

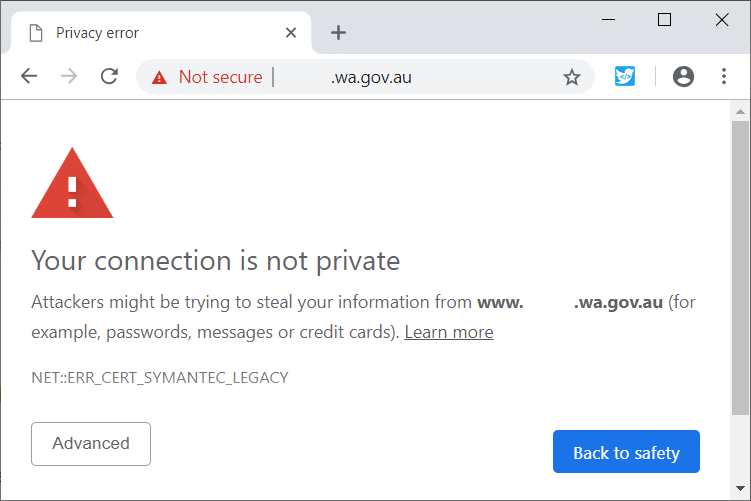

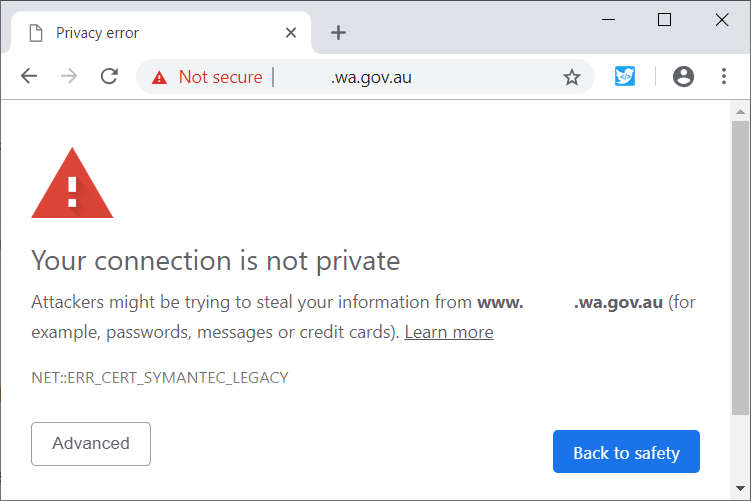

Lambda Support for Python 3.7

You may initially think this is trivial, stepping up from Python 3.6 to Lambda with Python 3.7, but it means that Python Lambda code can now make TLS 1.3 requests. Updating from Python 3.6 to 3.7 is mostly trivial; from 2.7 to 3.x normally means refactoring urllib/requests client code and liberal use of parentheses where previously they weren’t required (eg, for print()).
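If you want to check what a given runtime can actually negotiate, the standard library `ssl` module gained `HAS_TLSv1_3` and `TLSVersion` in Python 3.7. A minimal sketch, with helper names of my own; whether TLS 1.3 is really available depends on the OpenSSL build the interpreter is linked against, so treat the result as something to verify rather than assume:

```python
import ssl


def tls13_available():
    """True when this interpreter's OpenSSL build can negotiate TLS 1.3."""
    return ssl.HAS_TLSv1_3


def tls13_only_context():
    """Client context that refuses anything older than TLS 1.3."""
    context = ssl.create_default_context()
    context.minimum_version = ssl.TLSVersion.TLSv1_3
    return context


if tls13_available():
    ctx = tls13_only_context()
    print("TLS 1.3 available, minimum version:", ctx.minimum_version.name)
else:
    print("TLS 1.3 not available in this build")
```

The context can then be handed to anything that accepts an `ssl.SSLContext`, such as `urllib.request.urlopen(url, context=ctx)`.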

S3: Public Access Blocking

Block Public Access finally removes the need for custom Bucket policies to prevent accidental uploads with acl:public (which, when you’re using a 3rd party s3 client for which you can’t see or control the ACL used, may be scary). The downside of the previous policies that rejected uploads if ACL:public (or not acl:private) was used is that they interfered with the ability to do multi-part puts (different API).
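For a single bucket, switching everything on might look something like the sketch below. It uses boto3’s `put_public_access_block` call with its four settings; the helper names are mine, the bucket name is whatever you pass in, and the second function assumes boto3 is installed with credentials that are allowed to change the bucket’s configuration.

```python
def block_all_public_access_config():
    """All four Block Public Access settings switched on."""
    return {
        "BlockPublicAcls": True,        # reject new public ACLs on upload
        "IgnorePublicAcls": True,       # ignore any public ACLs already present
        "BlockPublicPolicy": True,      # reject bucket policies that grant public access
        "RestrictPublicBuckets": True,  # restrict access to buckets with public policies
    }


def block_public_access(bucket):
    """Apply the configuration to one bucket (needs boto3 and credentials)."""
    import boto3  # imported here so the config helper works without boto3 installed

    s3 = boto3.client("s3")
    return s3.put_public_access_block(
        Bucket=bucket,
        PublicAccessBlockConfiguration=block_all_public_access_config(),
    )
```

The same settings can also be applied at the account level, which is probably where most organisations will want them.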

There have been way too many cases of customers leaving objects publicly accessible. This will become a critical control in future. Most organisations don’t want public access to S3: those that do want public, anonymous access probably should be using CloudFront to do so (and a CloudFront Origin Access Identity for this as well, with Lambda@Edge to handle auto indexing and trailing slash redirects).

DynamoDB: Encrypted by default

A big step up. In reality, the ‘encryption at rest’ scenario within AWS is a formality: as one of the few people in Australia who has actually been inside a US-East-1 facility (hey QuinnyPig, I recall that from your slide two weeks ago at Latency Conf), I can say the physical security is superb; the logical allocation of data and the knowledge of its physical location are handled by separate teams.

So given that someone in the facility doesn’t know where your data is, and someone who knows where it is doesn’t have physical access (and those with physical access can’t smuggle storage devices in or out), we’re at a high bar (physical devices only leave facilities when crushed into a very fine powder, particularly for SSD based storage).

So the Encrypted At Rest capability is more of a nice-to-have – an extra protection should the standard storage wiping techniques (already very robust) have an issue. But given the bulk of the AES algorithm has been in CPU extensions for years, the processing overhead of encryption is essentially nil.

Summary

I’ve tried my best to stay aware of so much, but the last 24 months have stretched the definition of what Cloud is so very wide. IoT, Robotics, Machine Learning, Vision Processing, Connect, Alexa, Analytics, DeepLens: this list seems so wide even before you dive deep into the details. And the existing stalwarts (EC2, S3, SQS, and even VPC) keep getting richer and richer.

The above are the services I’ve been interested in – there is definitely a hell of a lot more in the last 24 hours as well.

What’s today (US time) going to bring? I need to get some sleep, because this is exhausting just trying to keep the brain up to date.