I have been tinkering with AWS since 2008 and delivering AWS Cloud solutions since 2010. In that time I’ve seen many cloud trends come along, and the messaging from all of the hyperscale IaaS and PaaS providers subtly change.

This year, we’re seeing more talk about Optimisation. In the 2023 earnings calls, we heard:

- Microsoft: “Customers continued to exercise some caution as optimization … trends … continued”

- Google: “slower growth of consumption as customers optimized GCP costs reflecting the macro backdrop”

- AWS: “Customers continue to evaluate ways to optimize their cloud spending in response to these tough economic conditions.”

We have also seen the messaging around Migration to cloud evolve to “Migrate & Modernise”. This is putting pressure on the laziest, simplest, and least effective of the “Seven R’s of cloud migration”, being “Rehost” and “Relocate”.

Why is this?

Rehost takes the existing spaghetti of installed software and runs it in exactly the same way on a hyperscale cloud provider’s concept of a virtual machine. And in true least-effort fashion, if you previously had a virtual machine with 64 GB of RAM (even if only 20% utilised), then you would select the closest match in a simple rehost/reinstall.
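To make the alternative to "closest match" concrete, here is a minimal right-sizing sketch. It assumes the 20% figure is the observed peak, and the 30% headroom and the list of candidate sizes are illustrative values of mine, not any provider's actual catalogue.

```python
def right_size_memory_gb(provisioned_gb, peak_utilisation, sizes_gb, headroom=0.3):
    """Return the smallest candidate size that covers peak usage plus headroom."""
    needed_gb = provisioned_gb * peak_utilisation * (1 + headroom)
    for size in sorted(sizes_gb):
        if size >= needed_gb:
            return size
    # Nothing in the candidate list is large enough: keep the current size.
    return provisioned_gb


# 64 GB provisioned, 20% used at peak, hypothetical size options.
print(right_size_memory_gb(64, 0.20, [8, 16, 32, 64]))  # 32
```

Even with generous headroom, the machine in this example needs half the memory that a like-for-like rehost would buy.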

If you had 10 application servers on 24×7, then you still get 10 cloud virtual machines, 24×7, even if you only need that peak capacity for one day of the year.
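A rough sketch of what that costs in instance-hours over a year. The baseline of 2 servers outside the peak day is an assumption for illustration only; the 10 servers and the single peak day come from the example above.

```python
HOURS_PER_DAY = 24
DAYS_PER_YEAR = 365


def always_on_hours(servers):
    """Instance-hours per year for a fixed fleet running 24x7."""
    return servers * HOURS_PER_DAY * DAYS_PER_YEAR


def scaled_hours(baseline_servers, peak_servers, peak_days):
    """Instance-hours per year when the fleet only grows on peak days."""
    normal_days = DAYS_PER_YEAR - peak_days
    return (baseline_servers * normal_days + peak_servers * peak_days) * HOURS_PER_DAY


fixed = always_on_hours(10)
# Hypothetical: 2 servers carry the normal load, 10 are needed one day a year.
elastic = scaled_hours(baseline_servers=2, peak_servers=10, peak_days=1)
print(fixed, elastic)  # 87600 17712
```

Under those assumptions the rehosted fleet pays for 87,600 instance-hours where 17,712 would do.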

Even more interesting is paying licensing fees for a virtualisation layer you don’t need (and getting a different experience when you do).

Not very efficient. Not very smart. But least effort.

So why do organisations do this?

That is easy: It’s complicated to do well. It takes time, experience, and wisdom.

The technology industry is full of individuals who think their job is to make sure nothing changes. Historically, many IT service frameworks were designed around slowing the rate of change, diametrically opposed to DevOps. And many organisations see the cost of technology operations, not the value of it.

Cost has been driven down so much that the talented individuals have left, and those who remain (who perhaps couldn’t get a job elsewhere) are keeping the lights on. They don’t have the experience, knowledge or wisdom to know what good looks like; they just know what has, for the last 30 years, been “stable”.

And while some engineers are keen to learn new things and put them to use, many senior stakeholders are thinking “what’s the least we can do right now”.

So when it comes to a cloud migration, they see “least change” as “least risky”, not “more costly”.

In 2023 at the AWS Partner summit, AWS representatives quoted that “only some 15% of workloads that will move to the cloud, have now done so”. They added that that 15% now requires some level of modernisation.

Again, 10 years ago, many organisations moved large workloads using the lift-and-shift method, onto the same number of instances, of whatever type was current at the time: perhaps the m3.xlarge in AWS, or similar elsewhere. And then the people who knew what Cloud was departed the scene, leaving an under-experienced set of individuals to keep the lights on and change nothing.

I once did a review of a 3rd party analytics package running on 4 m3.xlarge instances; they had acquired a 3 year Reservation, and kept the instances running 24×7. They didn’t use them all the time. They had never made any changes (not even OS patching). They were running a RedHat 7.x OS.

Two cycles (yes, 2) of newer instance families had been released in that period: the m4, and then the m5. The Linux kernel in that version of RedHat lacked the support the newer families needed, so they could not move these virtual machines to a newer AWS EC2 instance family.

In reality, they were fortunate, as they were running a version of RedHat that finally (after 20+ years) supported in-place upgrades. The easiest path ahead was to snapshot (or make an AMI of) each individual host, then upgrade in place to the latest RedHat release, and then, while the instance was shut down (stopped), change the instance type to the m5 equivalent. This alone would save them something like 20% of cost. They could then take out a Reservation (my recommendation was for one year, as things change…) and they would have ended up at over 80% cost reduction, with faster performance.
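As a rough sketch of that path, the two functions below cover the backup step and the instance type change. They assume `ec2` is a boto3 EC2 client; the function names and the example instance ID are hypothetical, and the in-place OS upgrade itself happens on the host between the two calls and is not shown.

```python
def backup_instance(ec2, instance_id, image_name):
    """Create an AMI of the instance so there is a way back, and wait for it."""
    # Note: by default, creating an image of a running instance reboots it.
    image = ec2.create_image(InstanceId=instance_id, Name=image_name)
    ec2.get_waiter("image_available").wait(ImageIds=[image["ImageId"]])
    return image["ImageId"]


def change_instance_type(ec2, instance_id, new_type):
    """Stop the instance, switch its type, and start it again."""
    # The instance type can only be changed while the instance is stopped.
    ec2.stop_instances(InstanceIds=[instance_id])
    ec2.get_waiter("instance_stopped").wait(InstanceIds=[instance_id])
    ec2.modify_instance_attribute(
        InstanceId=instance_id,
        InstanceType={"Value": new_type},
    )
    ec2.start_instances(InstanceIds=[instance_id])
```

With real credentials this might be used as `ec2 = boto3.client("ec2")`, then `backup_instance(ec2, "i-0123456789abcdef0", "pre-upgrade")`, the OS upgrade, and finally `change_instance_type(ec2, "i-0123456789abcdef0", "m5.xlarge")`. Doing one node at a time keeps the rest of the cluster available while each host is stopped.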

Of course, far cleaner is to understand the installation of the 3rd party analytics software, the way it clusters, and replace each node with a clean OS, instead of keeping the cruft from bygone installations.

And beyond that would have been the option to not Reserve any instances, but just turn them off when not in use.
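Whether switching off beats a Reservation depends on how many hours the instances are actually needed. The sketch below compares the two as fractions of the always-on, on-demand price; the 40% reservation discount and the weekly hours are made-up inputs, not real pricing, and storage charges for stopped instances are ignored.

```python
HOURS_PER_WEEK = 24 * 7


def cheaper_option(hours_needed_per_week, reservation_discount):
    """Compare scheduled on-demand use with a reservation billed for every hour."""
    # Both figures are fractions of the 24x7 on-demand cost.
    on_demand_fraction = hours_needed_per_week / HOURS_PER_WEEK
    reserved_fraction = 1 - reservation_discount
    if on_demand_fraction < reserved_fraction:
        return "schedule on-demand"
    return "reserve"


print(cheaper_option(50, 0.40))   # schedule on-demand
print(cheaper_option(140, 0.40))  # reserve
```

The break-even point is simply utilisation equal to one minus the discount: below it, turning instances off wins.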

But they hadn’t.

Poor practices in basic maintenance and continual patching and upgrades meant they had a lot of work to do now, rather than already being up to date.

I’ve seen a number of Partners in the Cloud ecosystem get recognised and rewarded for the sheer volume of migrations they have done. These are often naive lift-and-shifts that look good for the first 6 months, and then the bill shock starts to set in. Over time, the lack of maintenance becomes an issue.

Even the “Re-platform” pattern of migration (adopting some cloud managed services in your tech stack, perhaps something like a Managed Database service) can have its catches. Selecting a managed version of perhaps Postgres 9.5 some 5 years ago would have put you in trouble when 9.6, 10, 11, 12, 13 and 14 came out, because as newer versions arrived, older versions were deprecated and eventually became unavailable. If you weren’t adequately addressing technical debt and maintenance tasks as part of your standard operations, then you’re looking at possible operational trouble.

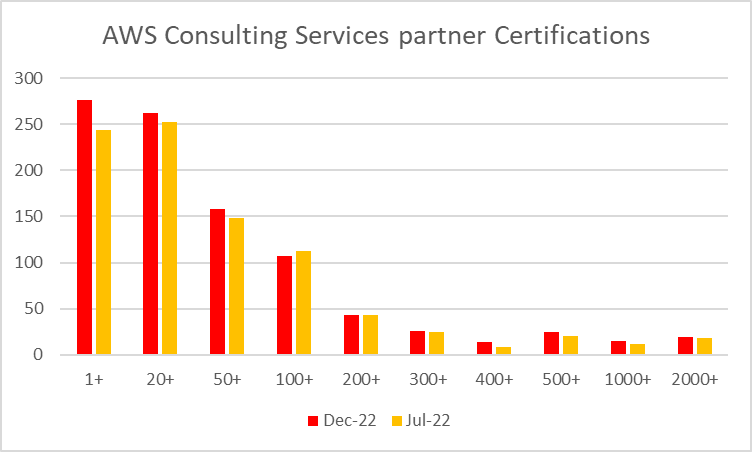
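A small check like the following can make version drift on a managed Postgres visible as part of routine operations. It is only a sketch: the list of major versions is the one mentioned above, hard-coded for illustration, and a real check would read what the provider currently offers.

```python
# Major versions named in the text, oldest first (illustrative, not a live list).
MAJOR_VERSIONS = ["9.5", "9.6", "10", "11", "12", "13", "14"]


def major_of(version):
    """Extract the major version from a full version string."""
    parts = version.split(".")
    # Before PostgreSQL 10, the first two components formed the major version.
    if int(parts[0]) < 10:
        return ".".join(parts[:2])
    return parts[0]


def releases_behind(running_version, known_majors=MAJOR_VERSIONS):
    """Count how many newer major versions exist than the one running."""
    major = major_of(running_version)
    return len(known_majors) - 1 - known_majors.index(major)


print(releases_behind("9.5.25"))  # 6
print(releases_behind("13.4"))    # 1
```

Alerting when this number climbs above one or two turns an eventual forced upgrade into a planned one.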

One clever tactic to address this is to have engineers who are familiar with more contemporary configurations, experts in the field (whom you can spot by the 6+ concurrent AWS Certifications they hold), perform a Well-Architected Framework Review. This is typically a short, one-week engagement for a professional consulting company, borrowing experienced talent into your business that can give you clear, addressable mitigations covering many facets, including cost.

If you previously did a Lift & Shift, and found it expensive, perhaps your organisation’s ability to bring expertise to bear on the work is missing. If you don’t know where to start, then start asking for expertise.

So why are all the cloud providers talking about Optimisation? Because they know their customers can do better on the same cloud provider. And they would rather have a customer optimise their spend and stay than migrate & modernise to a competitor and start the training and upskilling process again.