I have spent many years working with Landgate, the state government Department of Land Administration. Its land registry platform is a well-known AWS case study, and is available to other land jurisdictions around the world that wish to move to it.

One of the integrations implemented is to and from the Electronic Lodgement Network Operators (ELNOs), which facilitate electronic settlement of property transactions. Only one ELNO is currently active in Australia: Property Exchange Australia, otherwise known as PEXA.

Using PEXA saves settlement agencies and banks from having to send representatives to a specific location at a specific time with an assortment of cheques, paperwork, and other administration, where a single thing out of order causes settlement to be delayed (a costly exercise). Many transaction types are now mandated to be done via electronic interfaces; one of the first of these in Western Australia was the Discharge of Mortgage.

More than 80% of transactions on the land registry involve a property being sold while under mortgage to someone else who has also taken out a mortgage. This is called DTM: Discharge, Transfer, Mortgage. It was one of the first transaction types for which the Advara platform automated validation of the submitted data, saving huge amounts of manual effort.

For a transaction submitted by PEXA, the general turnaround time on data validation and transaction approval has now dropped to around 10.8 seconds, down from historical highs of roughly 30 days.

My Transaction

I was recently purchasing a new property (my home study is occupied by a rather adorable 5-year-old girl, IMHO), and, armed with knowledge of how the land titling system works, I figured I'd actively watch my settlement transaction.

PEXA has created a user application called PEXA Key, for Android and iPhone, that permits sellers and purchasers to be invited to their property settlement transaction.

All a settlement agent needs to do is collect a mobile phone number and email address from the seller or purchaser, and enter them into the PEXA workspace.

I asked the real estate agent selling the property about this, and then my settlement agent, and neither of them had heard of it, much less actually done it. So I pushed on, and lo, managed to have them submit my details.

This post shows what happens next.

A Text Message

I received a text message almost immediately – with variables shown where real names were used:

Hi JAMES,

${SETTLEMENT_AGENT} has invited you to download the PEXA Key app to track your settlement. Check your email for more details. Get the app free here key.pexa.com.au/download or exclusively on Google Play or Apple App Store.

Text message I received after my settlement agent registered me in the workspace.

I quickly complied, and was then sent a security activation code.

The app then told me when my settlement had been scheduled for, and any pending tasks that I was responsible for (as it happened, I had already done everything, so it was fine).

This immediately gave me peace of mind, knowing the transaction workspace was set up and pending.

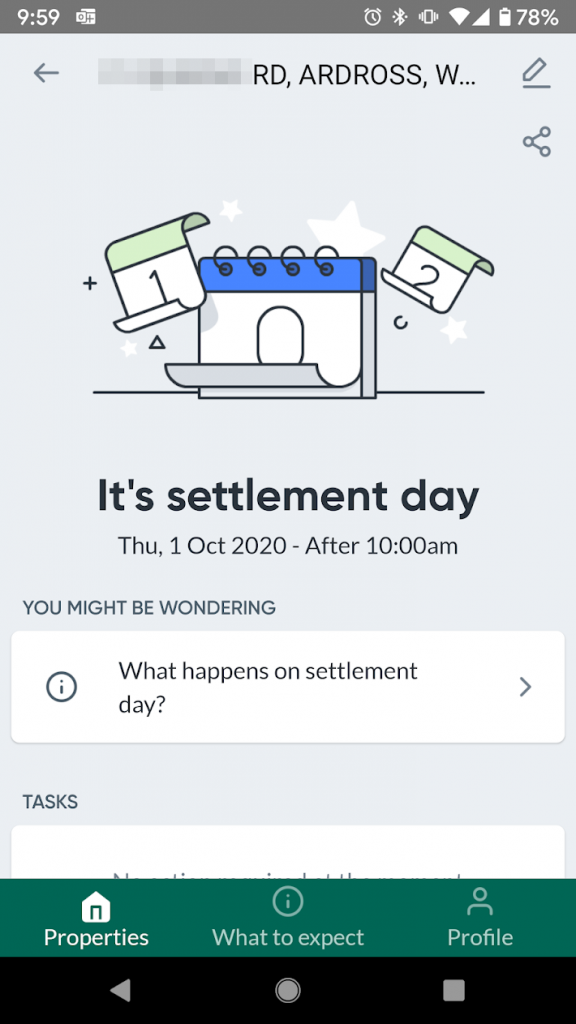

The morning of settlement came around, and I was greeted with this:

I nervously checked the application every few minutes to see what would happen next.

And so it begins

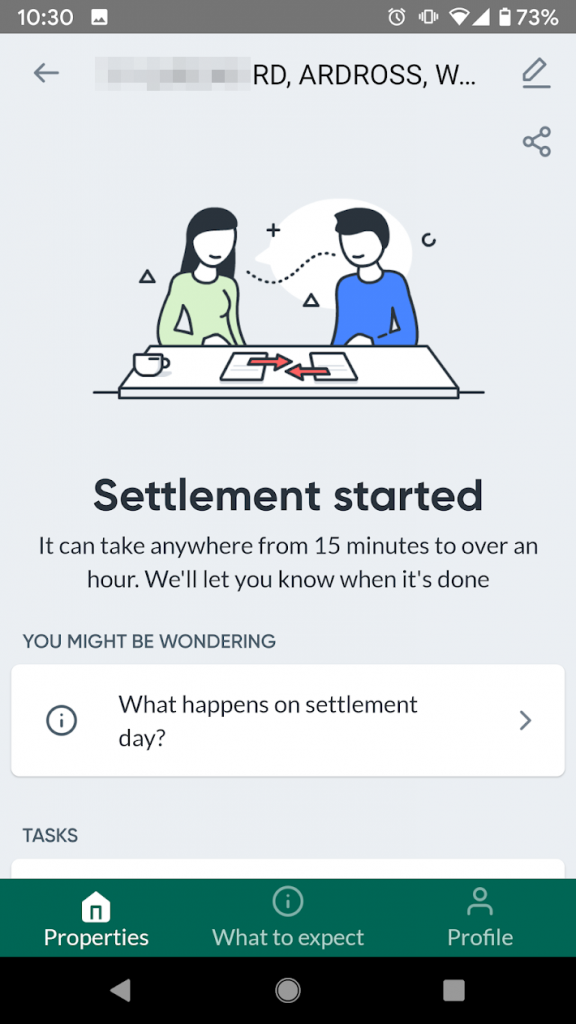

It turned out that the process was initiated at around 10:25am, after which the PEXA Key application showed:

OK, strap in, the wheels are in motion.

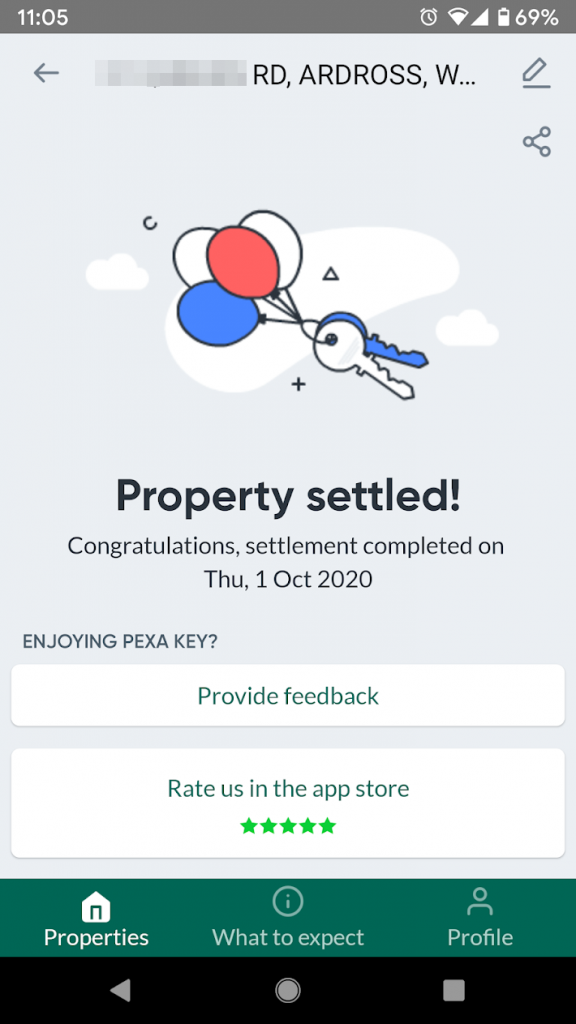

It took around 40 minutes until all was done and dusted, and the final result came through:

A few hours later, a new set of house keys was in my hand.

This has to be the most expensive testing I have ever personally done! 😉

Including the end customer in this process, even with just simple visibility, is something I think should be offered to all parties in the transaction, to bring confidence and clarity about its progress or anything holding it up.

Key to this (pun intended, in two ways) is the swift and efficient registration of land property transactions. My colleagues and I have worked hard for years to uplift the validation and security of the land registry system, and continue to do so. And as a customer of this system, I found it worked smoothly.

Cyber Security Magazine has some coverage of PEXA Key, noting that it closes off an avenue of attack.

I recommend that anyone buying or selling property ask their agent to invite them into the settlement on PEXA using PEXA Key. As many in the real estate industry I have spoken to are unaware of this, you may need to explain it (send them this article's URL), but it's worth it.

Disclosure: I do not work for PEXA, nor have I been asked by them (or anyone else) to write this. I share the above to assist anyone else who would like to see their property transactions being processed. While PEXA is a national (Australian) electronic settlement platform, the turnaround time for each separate land jurisdiction to validate and register the transaction will vary. Indeed, I'd challenge any of them to beat 10.8 seconds for full validation!