DNS is one of the last insecure protocols still in widespread use. Since 1983 it has helped identify resources on the Internet, with a name space and a hierarchy based on a commonly agreed root.

Your local device (your laptop, your phone, your smart TV, whatever you’re using to read this article) has typically been configured with a local DNS resolver. When your device needs to look up an address, it asks that resolver to go and find the answer.

The protocol your device uses to talk to the resolver, and that the resolver uses to reach out across the Internet, is unencrypted. It normally runs on UDP port 53, switching to TCP port 53 under certain conditions (for example, when a response is too large for UDP and comes back truncated).

There is no privacy, on either your local network or the wider Internet: anyone who can observe the traffic can see which records are being looked up and what responses come back.
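To make the privacy point concrete, here is a minimal Python sketch (standard library only) that builds the bytes a client would send to UDP port 53 for an A-record lookup, following the RFC 1035 message layout. The query ID and the name `www.bank.com` are illustrative; the point is that the name sits in the payload as readable text.

```python
import struct


def encode_qname(name: str) -> bytes:
    """Encode a domain name as DNS labels: a length byte, the label, ending with a zero byte."""
    out = b""
    for label in name.rstrip(".").split("."):
        raw = label.encode("ascii")
        out += bytes([len(raw)]) + raw
    return out + b"\x00"


def build_query(name: str, query_id: int, qtype: int = 1) -> bytes:
    """Build a minimal DNS query (RFC 1035): 12-byte header plus one question, class IN."""
    # Header: ID, flags (0x0100 = recursion desired), QDCOUNT=1, ANCOUNT=NSCOUNT=ARCOUNT=0
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    # Question: QNAME, QTYPE (1 = A record), QCLASS (1 = IN)
    question = encode_qname(name) + struct.pack("!HH", qtype, 1)
    return header + question


packet = build_query("www.bank.com", query_id=0x1234)
# Everything after the header is the question, with the name as plain ASCII labels:
print(packet[12:])  # b'\x03www\x04bank\x03com\x00\x00\x01\x00\x01'
```

Sending it would just mean writing these bytes to a UDP socket aimed at your resolver’s port 53; nothing in the message is encrypted.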

There’s also no validation that the response sent back to the resolver IS the correct answer, and malicious actors may try to send forged responses to your upstream resolver. For example, I could join the same WiFi network as you and send UDP packets to the configured resolver claiming that the answer for “www.bank.com” is my address, in order to get you to connect to a fake service I’m running and hand over some data (like your username and password). Hopefully your bank is using HTTPS, and the certificate warning you would likely see would be enough to stop you entering anything I could capture.
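The weakness is that, without DNSSEC, a resolver pairs a reply with its outstanding query using only fields an attacker can copy or guess: the 16-bit transaction ID, the question, and (not modelled here) the source address and UDP port. The Python sketch below is a simplified model of that check, not any real resolver’s code; `203.0.113.66` is a documentation address standing in for the attacker’s server, and the parser assumes an uncompressed question name.

```python
import socket
import struct


def question_section(message: bytes) -> bytes:
    """Return the raw question of a one-question DNS message (assumes an uncompressed name)."""
    end = 12  # the question starts right after the 12-byte header
    while message[end] != 0:  # walk the length-prefixed labels to the zero (root) label
        end += 1 + message[end]
    return message[12:end + 5]  # zero byte + QTYPE (2 bytes) + QCLASS (2 bytes)


def forge_response(query: bytes, ipv4: str) -> bytes:
    """Copy the query's ID and question, then answer it with an address of our choosing."""
    (qid,) = struct.unpack("!H", query[:2])
    header = struct.pack("!HHHHHH", qid, 0x8180, 1, 1, 0, 0)  # QR, RD, RA set; one answer
    # Answer RR: name as a pointer to offset 12, type A, class IN, TTL 300, 4-byte address
    answer = struct.pack("!HHHIH", 0xC00C, 1, 1, 300, 4) + socket.inet_aton(ipv4)
    return header + question_section(query) + answer


def naive_accept(query: bytes, response: bytes) -> bool:
    """Plain-DNS style check: QR bit set, same ID, same question. Nothing cryptographic."""
    (qid,) = struct.unpack("!H", query[:2])
    rid, flags = struct.unpack("!HH", response[:4])
    return bool(flags & 0x8000) and rid == qid and question_section(response) == question_section(query)


# A query for www.bank.com with transaction ID 0x1234 (A record, class IN)
query = (struct.pack("!HHHHHH", 0x1234, 0x0100, 1, 0, 0, 0)
         + b"\x03www\x04bank\x03com\x00" + struct.pack("!HH", 1, 1))
spoofed = forge_response(query, "203.0.113.66")
print(naive_accept(query, spoofed))  # True: nothing proves who sent it
```

Real resolvers also randomise the source port, which raises the attacker’s guessing cost; DNSSEC takes a different route by making the answer itself verifiable.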

The solution was to use digital signatures (not encryption) so that the upstream resolver can verify the DNS responses it receives from across the Internet. And thus DNSSEC was born in 1997 (23 years ago, as of 2020).
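To show what “signatures, not encryption” means, here is a deliberately toy Python sketch of textbook RSA signing using only the standard library. It is not DNSSEC’s actual record format (RRSIG, RFC 4034) or any algorithm Route 53 uses; the key is far too small and unpadded to be secure, and the record strings are made up. It only illustrates that the record stays readable, while anyone holding the public values can check it hasn’t been altered.

```python
import hashlib

# Toy RSA key built from two Mersenne primes (2**61 - 1 and 2**89 - 1).
# Far too small and unpadded to be secure: this only illustrates the idea of signing.
P, Q = 2**61 - 1, 2**89 - 1
N = P * Q
E = 65537
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent (modular inverse needs Python 3.8+)


def digest(record: bytes) -> int:
    """Hash the record and reduce it into the range of the toy modulus."""
    return int.from_bytes(hashlib.sha256(record).digest(), "big") % N


def sign(record: bytes) -> int:
    """Zone operator: sign with the private exponent D. The record itself is not hidden."""
    return pow(digest(record), D, N)


def verify(record: bytes, signature: int) -> bool:
    """Resolver: check the signature using only the public values N and E."""
    return pow(signature, E, N) == digest(record)


record = b"www.example.com. 300 IN A 192.0.2.10"
sig = sign(record)
print(verify(record, sig))                                      # True
print(verify(b"www.example.com. 300 IN A 203.0.113.66", sig))   # False
```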

The take up has been slow.

Part of the reason is that each component of a DNS name (each zone) needs a DNSSEC-capable DNS server to generate the signatures, and then each domain has to be signed.

The public DNS root was signed in 2010, along with some of the original top-level domains (TLDs). Today, the Wikipedia page listing Internet TLDs shows that a large number of them are signed, ready for their customers’ domains to return DNSSEC results.

Since 2012, US Government agencies have been required by NIST to deploy DNSSEC, but in most years agencies opt out, either because it has been too difficult or because the DNS software or service hosting their domain does not support it.

Two parts to DNSSEC

On the one side, the operator of the zone being looked up, and the operators of its parent zones, all need to support DNSSEC and have established a chain of trust. If you turn on DNSSEC for your authoritative domain, clients that are not validating the responses won’t see any difference.
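The chain of trust works by publishing, in the parent zone, a DS record that is a hash of the child zone’s key-signing key (RFC 4034, section 5). The Python sketch below computes the two values a DS record carries that you can derive yourself: the key tag (RFC 4034, Appendix B) and the SHA-256 digest (digest type 2, RFC 4509). The key bytes are a made-up placeholder rather than a real DNSKEY, so the output won’t match any live zone.

```python
import hashlib
import struct


def name_to_wire(name: str) -> bytes:
    """Canonical wire format of a domain name: lowercase labels, each length-prefixed."""
    out = b""
    for label in name.lower().rstrip(".").split("."):
        out += bytes([len(label)]) + label.encode("ascii")
    return out + b"\x00"


def dnskey_rdata(flags: int, algorithm: int, public_key: bytes) -> bytes:
    """DNSKEY RDATA: flags (2 bytes), protocol (always 3), algorithm, then the public key."""
    return struct.pack("!HBB", flags, 3, algorithm) + public_key


def key_tag(rdata: bytes) -> int:
    """Key tag checksum from RFC 4034 Appendix B (not used for the obsolete algorithm 1)."""
    acc = 0
    for i, byte in enumerate(rdata):
        acc += byte if i & 1 else byte << 8  # even bytes are the high half of each 16-bit word
    acc += (acc >> 16) & 0xFFFF
    return acc & 0xFFFF


def ds_sha256(owner: str, rdata: bytes) -> str:
    """DS digest type 2: SHA-256 over the owner name in wire format followed by DNSKEY RDATA."""
    return hashlib.sha256(name_to_wire(owner) + rdata).hexdigest().upper()


# Hypothetical key-signing key: flags 257 (zone key + SEP), algorithm 13 (ECDSA P-256/SHA-256)
rdata = dnskey_rdata(257, 13, bytes(range(64)))  # placeholder 64-byte "public key"
print("example.com. IN DS", key_tag(rdata), 13, 2, ds_sha256("example.com.", rdata))
```

The parent zone publishes this DS record; a validating resolver that already trusts the parent can then trust the child’s key, and so on down from the root.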

Separately, the client-side DNS resolver (often run by your ISP, telco, or network provider) needs to understand and validate DNSSEC responses. If the resolver operator turns on DNSSEC validation, there’s no impact on resolving domains that don’t support DNSSEC.

Both of these need to be in place to offer any protection against DNS spoofing, cache poisoning or other attacks.
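How the two halves combine can be summarised using the validation states from RFC 4035 (section 4.3). The Python sketch below is a simplified model, not Route 53 Resolver’s implementation: an unsigned (insecure) zone is answered as before, a validated answer gets the AD (Authenticated Data) flag, and bogus data becomes SERVFAIL. The string return values are illustrative labels, not real API output.

```python
from enum import Enum


class Validation(Enum):
    SECURE = "secure"      # signatures chain back to a trust anchor (e.g. the root key)
    INSECURE = "insecure"  # the parent proves the zone is unsigned; nothing to check
    BOGUS = "bogus"        # signatures are missing, expired or don't verify


def resolver_reply(resolver_validates: bool, state: Validation) -> str:
    """Simplified model of what a client sees, given the resolver setting and zone state."""
    if not resolver_validates:
        return "NOERROR"  # non-validating resolver passes the answer on unchecked
    if state is Validation.SECURE:
        return "NOERROR+AD"  # answer returned with the Authenticated Data flag set
    if state is Validation.INSECURE:
        return "NOERROR"  # unsigned zones resolve exactly as before
    return "SERVFAIL"  # bogus data is withheld from the client


for validates in (False, True):
    for state in Validation:
        print(f"validating={validates!s:5} zone={state.value:8} -> {resolver_reply(validates, state)}")
```

Only when the resolver validates and the zone is signed does a forged or broken answer get caught; in every other combination the client simply receives whatever came back.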

Route 53 Support for DNSSEC

In December 2020, after many years and many customer requests, Route53 finally announced support for DNSSEC. This support comes in two ways.

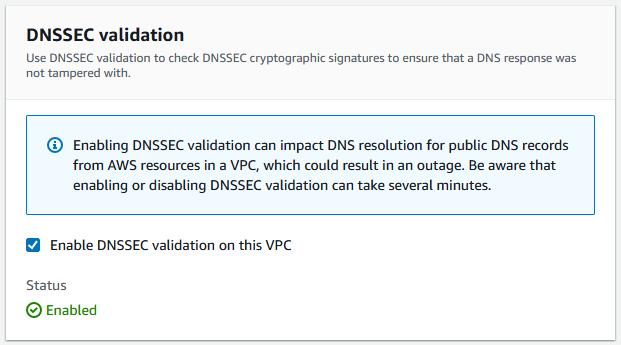

Firstly, there is now a tick box to enable the VPC-provided resolver to validate DNSSEC records, where they are present in the responses it receives. At this stage it’s simply on or off.

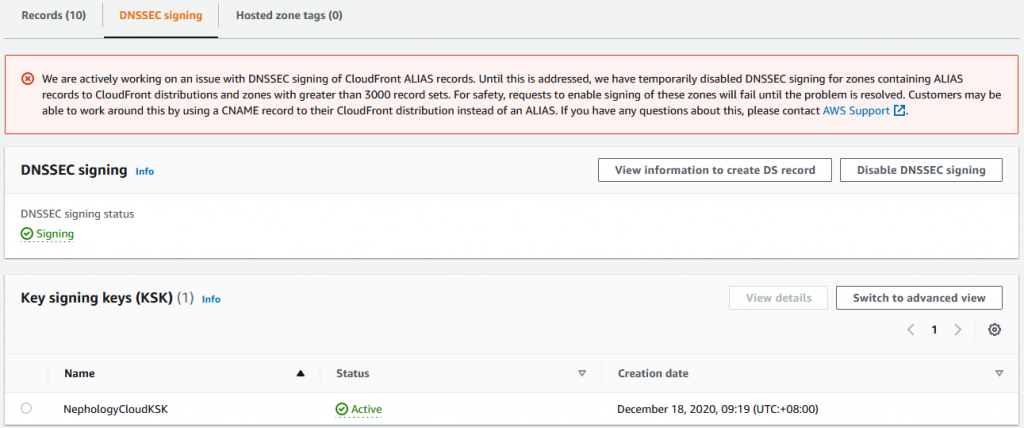

Separately, for hosted zones (your domains), you can now enable DNSSEC signing so that Route53 serves signed responses to queries for your DNS records, allowing them to be validated.
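If you’d rather script zone signing than click through the console, the Route 53 API exposes the same steps. This Python sketch assumes boto3’s `route53` client methods `create_key_signing_key` and `enable_hosted_zone_dnssec`, and a customer managed KMS key that Route 53 is permitted to use (per AWS documentation at the time of writing: in us-east-1, ECC_NIST_P256, sign/verify). The zone ID, key ARN and key name are hypothetical placeholders; check the current AWS documentation before relying on it.

```python
import uuid


def enable_zone_signing(route53, hosted_zone_id: str, kms_key_arn: str, ksk_name: str) -> str:
    """Create an active key-signing key for the zone, then turn on DNSSEC signing.

    `route53` is assumed to be a boto3 Route 53 client; all arguments are placeholders.
    Returns the change status Route 53 reports for the enable request.
    """
    route53.create_key_signing_key(
        CallerReference=str(uuid.uuid4()),  # unique token so a retried request isn't duplicated
        HostedZoneId=hosted_zone_id,
        KeyManagementServiceArn=kms_key_arn,
        Name=ksk_name,
        Status="ACTIVE",
    )
    response = route53.enable_hosted_zone_dnssec(HostedZoneId=hosted_zone_id)
    return response["ChangeInfo"]["Status"]  # e.g. "PENDING" until the change is in sync


# Hypothetical usage (needs boto3 and AWS credentials):
# import boto3
# status = enable_zone_signing(boto3.client("route53"), "Z0000000EXAMPLE",
#                              "arn:aws:kms:us-east-1:111122223333:key/EXAMPLE", "example_ksk")
```

Signing alone doesn’t complete the chain of trust: the DS record for the new key still has to be added at the parent zone, usually through your registrar.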

A significant caveat right now (December 2020) for hosted zones is that this doesn’t support the Route53-specific ALIAS record type, used to point custom names at CloudFront distributions.

DNSSEC Considerations: VPC Resolver

You should probably enable DNSSEC validation for your VPC resolvers, particularly if you want extra assurance that you aren’t being spoofed. There appears to be no additional cost, so the only question is: why not?

The largest risk comes from DNSSEC misconfiguration on the domains you are looking up.

In January 2019, the US Government was in a shutdown due to blocked legislation. Staff were furloughed, and some of those agencies had DNSSEC deployed. For at least one of them, its DNSSEC signatures expired, taking its entire domain offline for validating resolvers. (Many others let their website TLS certificates expire, causing browser warnings, but email, for example, kept working.)
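Mechanically, an outage like this happens because every DNSSEC signature (RRSIG record) carries an inception and an expiration time, and signatures must be regenerated before they lapse; after that, validating resolvers treat the data as bogus. The Python sketch below checks a signature window as RFC 4034 (section 3.1.5) describes, comparing the 32-bit timestamps with serial-number arithmetic (RFC 1982). The timestamps are made up for illustration.

```python
import time
from typing import Optional

MOD = 2**32   # RRSIG timestamps are 32-bit counts of seconds since 1970
HALF = 2**31


def serial_le(a: int, b: int) -> bool:
    """a <= b under RFC 1982 serial-number arithmetic (wraps around at 2**32)."""
    return a == b or (b - a) % MOD < HALF


def rrsig_is_current(inception: int, expiration: int, now: Optional[int] = None) -> bool:
    """True if `now` falls inside the signature's validity window (RFC 4034, section 3.1.5)."""
    now = (int(time.time()) if now is None else now) % MOD
    return serial_le(inception, now) and serial_le(now, expiration)


def seconds_until_expiry(expiration: int, now: int) -> int:
    """Signed time left before the signature lapses; negative once it has expired."""
    diff = (expiration - now) % MOD
    return diff - MOD if diff >= HALF else diff


# Made-up signature window, valid for 14 days
inception = 1_700_000_000
expiration = inception + 14 * 86400
print(rrsig_is_current(inception, expiration, now=inception + 86400))  # True
print(rrsig_is_current(inception, expiration, now=expiration + 1))     # False
```

A check along these lines, run regularly against the signatures your zones serve, is the kind of alarm that might have flagged such an expiry before users noticed.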

So, you should weigh up the improvement in security posture, versus the risk of an interruption through misconfiguration.

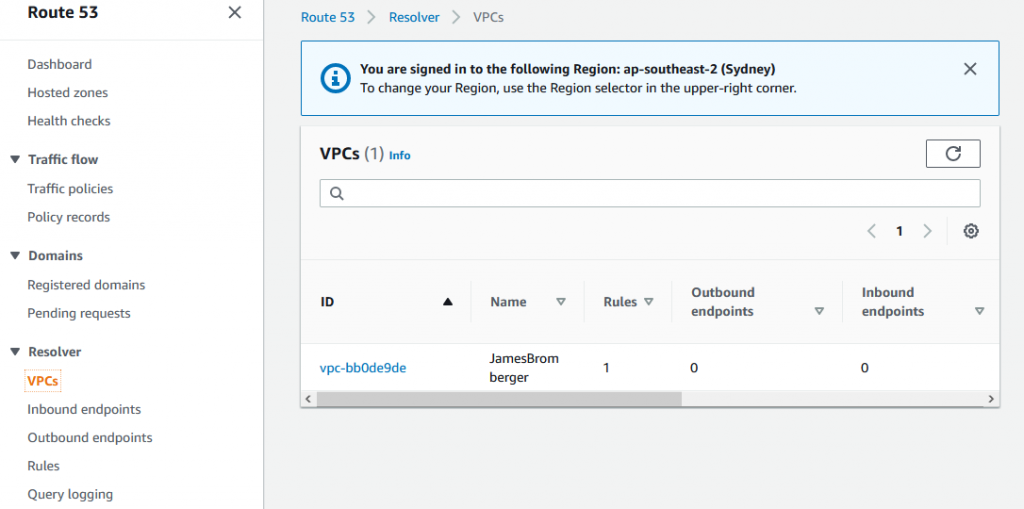

In order to enable it, go to the Route53 Console, and navigate to Resolvers -> VPCs.

Choose the VPC resolver, and scroll to the bottom of the page, where you’ll see the DNSSEC validation check box shown above.
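The same setting can also be scripted. This Python sketch assumes boto3’s `route53resolver` client and its `update_resolver_dnssec_config` method, which takes the VPC ID as `ResourceId` and `Validation="ENABLE"`; the VPC ID in the usage comment is a hypothetical placeholder.

```python
def enable_vpc_dnssec_validation(resolver_client, vpc_id: str) -> str:
    """Turn on DNSSEC validation for a VPC's Route 53 Resolver and return the reported status.

    `resolver_client` is assumed to be a boto3 "route53resolver" client.
    """
    response = resolver_client.update_resolver_dnssec_config(
        ResourceId=vpc_id,    # the VPC whose resolver should start validating
        Validation="ENABLE",
    )
    # Typically "ENABLING" at first, then "ENABLED" once the change has applied
    return response["ResolverDNSSECConfig"]["ValidationStatus"]


# Hypothetical usage (needs boto3 and AWS credentials):
# import boto3
# print(enable_vpc_dnssec_validation(boto3.client("route53resolver"), "vpc-0123456789abcdef0"))
```

Keeping this in code also gives you a quick way back: calling the same method with `Validation="DISABLE"` should switch validation off again if a misconfigured domain you depend on starts failing.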

DNSSEC Considerations: Your Hosted Zones

As a managed service, Route53 normally handles all maintenance and operational activities for you. Serving your records with DNSSEC at least gives your customers the opportunity to validate responses (once they enable validation on their side).

I’d suggest that this is a good thing. However, given the caveat around CloudFront ALIAS records right now, I’m not rushing to enable it on production hosted zones today; I’m starting with my non-production and non-mission-critical zones.

I have always said that your non-production environments should be a leading indicator of the security that will get to production (at some stage), so this approach aligns with this.

The long term impact of Route53 DNSSEC

Route53 is a strategic service: it means customers don’t need to allocate their own fixed address space and run their own DNS servers (many of which never receive enough security maintenance and updates). With DNSSEC support, the barriers to adoption are lower, and I expect we’ll see an uptick in DNSSEC deployment worldwide because of this capability coming to Route53.

Other Approaches

An alternative security mechanism being tested now is DNS over HTTPS, or DoH. It encrypts DNS queries, hiding the names being requested from the local network provider (which still sees the IP addresses being accessed).
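Under the hood, DoH (RFC 8484) carries the same binary DNS message over HTTPS. For a GET request, the message is base64url-encoded, with the padding removed, into a `dns` query parameter, and the client asks for the `application/dns-message` media type. The Python sketch below only builds such a URL; `https://doh.example/dns-query` is a hypothetical endpoint, and the query ID is 0 as RFC 8484 recommends for cache-friendliness.

```python
import base64
import struct


def dns_query(name: str, qtype: int = 1) -> bytes:
    """Minimal wire-format query: ID 0, recursion desired, one question, class IN."""
    qname = b"".join(bytes([len(label)]) + label.encode("ascii")
                     for label in name.rstrip(".").split("."))
    header = struct.pack("!HHHHHH", 0, 0x0100, 1, 0, 0, 0)
    return header + qname + b"\x00" + struct.pack("!HH", qtype, 1)


def doh_get_url(endpoint: str, name: str) -> str:
    """Encode the query per RFC 8484: base64url with any trailing '=' padding removed."""
    encoded = base64.urlsafe_b64encode(dns_query(name)).rstrip(b"=").decode("ascii")
    return f"{endpoint}?dns={encoded}"


print(doh_get_url("https://doh.example/dns-query", "www.bank.com"))
```

On the local network, an observer sees only a TLS connection to the DoH server; the name travels inside the encrypted HTTP request.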

In corporate settings, DoH is frowned upon, as many corporate IT departments want to inspect traffic and protect staff by blocking certain content at the DNS level (e.g., blocking all lookups for betting sites), and hiding lookups inside DoH can prevent this.
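DNS-level filtering of this kind usually comes down to the corporate resolver checking each queried name against a list of blocked domains and their subdomains. A minimal Python sketch, with a hypothetical blocklist entry (`betting.example`):

```python
from typing import AbstractSet


def is_blocked(qname: str, blocked_domains: AbstractSet[str]) -> bool:
    """True if qname equals a blocked domain or is a subdomain of one (case-insensitive)."""
    labels = qname.lower().rstrip(".").split(".")
    # Check the name itself and every parent: a.b.c -> "a.b.c", "b.c", "c"
    return any(".".join(labels[i:]) in blocked_domains for i in range(len(labels)))


BLOCKED = {"betting.example"}
print(is_blocked("www.Betting.example.", BLOCKED))  # True
print(is_blocked("notbetting.example", BLOCKED))    # False: matching is per whole label
```

A check like this only runs if the query reaches the corporate resolver; a client sending its lookups over DoH to an outside resolver bypasses it entirely.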

In the end, a resolver somewhere knows which client looked up what address.