I’ve been lampooning around in a very cloudy jacket for the last few months at various events, and people have asked “Why?”. In response, here is a tech run-down of… me (of course, see LinkedIn profile as well)…

Author: james

AWS Public Sector Summit, Canberra 2019

It’s been a reasonably busy few weeks for me; here’s a recount of the AWS Public Sector Summit in Canberra…

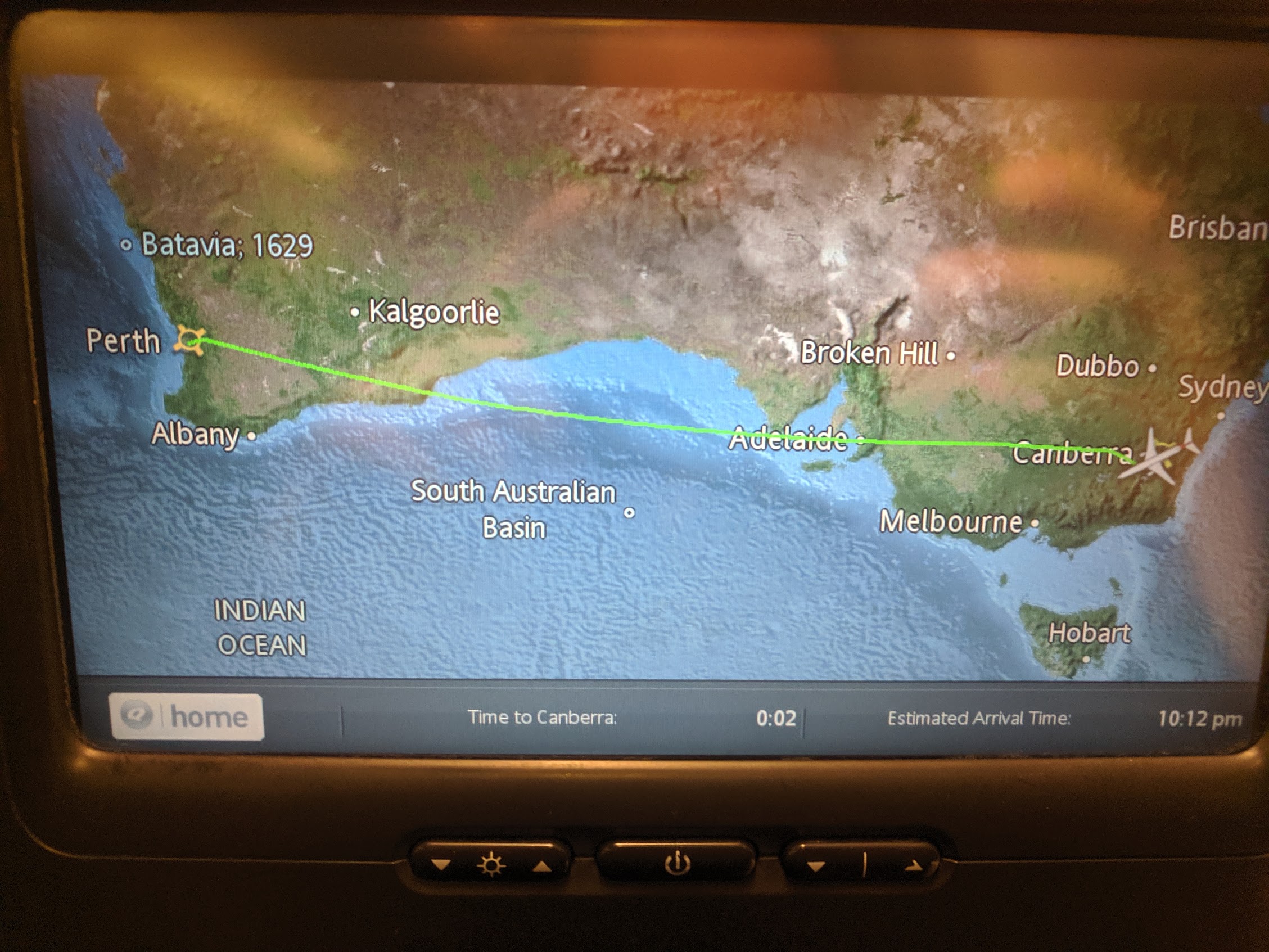

PER to CBR: 4 hours, 3,700 kms (2,300 miles for the old world)

On Monday 19th July, I went to Canberra for the AWS Public Sector summit, held at the National Convention Centre, with some 1,200 people in attendance this time. I recall the first AWS Canberra Public Sector Summit of 2013, with a few hundred going to the Realm Hotel: NCC is now starting to look reasonably full.

It’s always nice running into old friends, and this time it was long-time Linux.conf.au and Australian Open Source community personality Michael Still. Michael ran LCA 2013 in Canberra, when Sir Tim Berners-Lee was one of the keynotes (along with Bunnie Huang, Bdale, and Radia Perlman). I helped the video team that year – and recall chatting with Robert Llewellyn…

Later, I ran into Matt Fitzgerald, whom I first met when I worked for AWS – and who was the only other person from Perth in Seattle with AWS at that time (circa 2013).

Of course, multiple current and former colleagues, other AWS Ambassadors from the region, other folk in the cloud space with other vendors.

And then, in the foyer while chatting, I suddenly find Pia, well known for her work inside the halls of government from Australia to New Zealand, but 17 years ago, helping establish the fledgling Linux.conf.au conference and helping the Australian open source community find its platform and voice.

Of course, it’s not all about catching up with friends.

The masses packed into the main theatre to hear the set of lighthouse case studies, new capabilities, and opportunities that can be reached on the AWS platform.

This time, the baton of AWS PS Country Manager and MC responsibilities had passed to Iain Rouse, formerly of Technology One. Modis has been an AWS partner since 2013 (as former brand Ajilon), with many Public Sector customers since then, so it was nice to see our logo amongst a healthy ecosystem of capability.

Even nicer than seeing our logo is seeing our customers and those I have worked with. At the first PS Summit in 2013, I asked and had ICRAR attend; I used to work for UWA (as chief webmaster in the last millennium); when I was at AWS I worked with CFS SA and Moodle, and of course, Landgate – which is now over four years of running on the AWS Cloud.

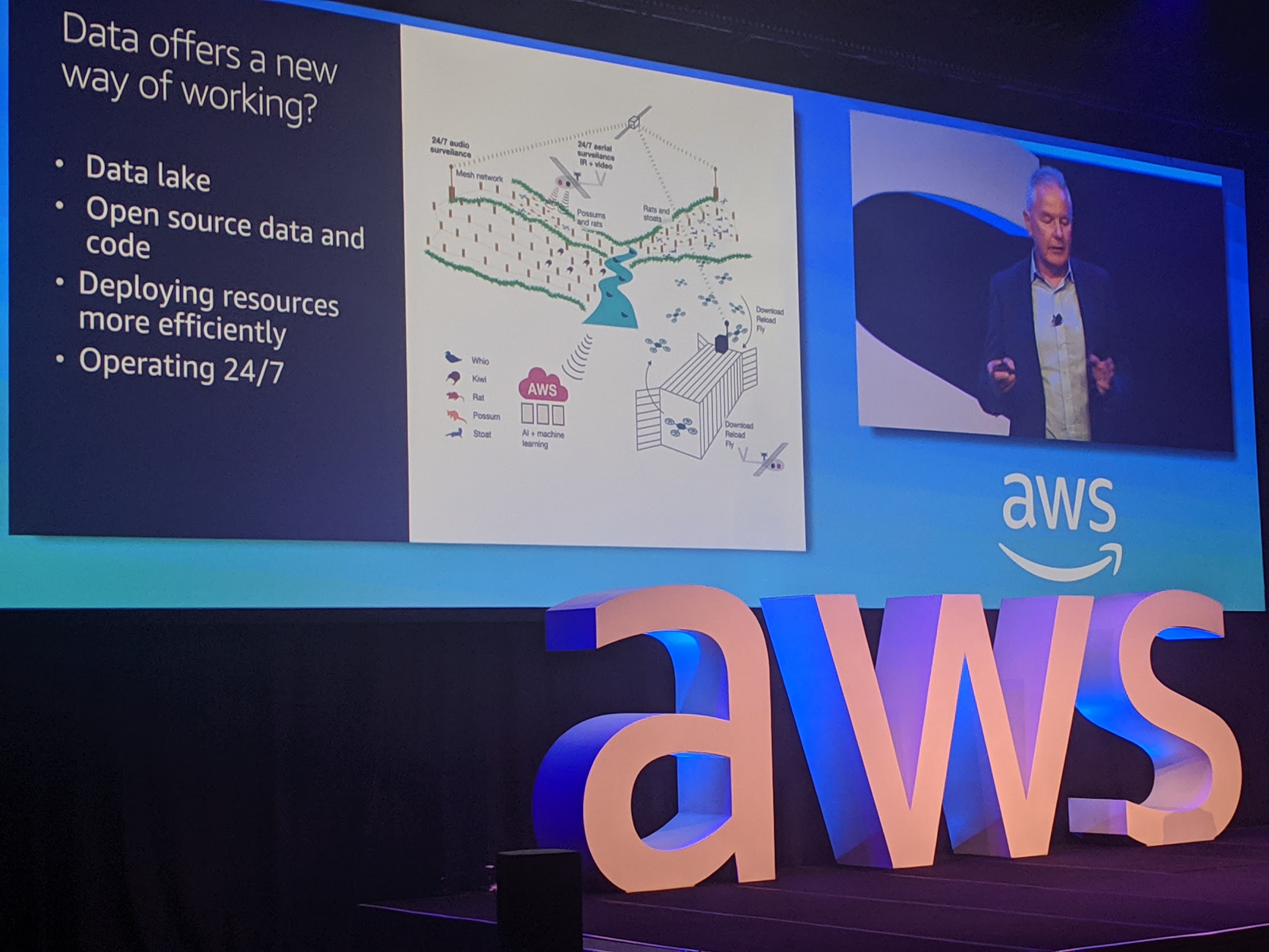

The CIO of New Zealand’s Department of Conservation, Mike Edginton, spoke of the digital twinning they have been doing for the environments that their endangered species are in, and of having to set traps for introduced species, with those traps now IoT-enabled. They cover a vast area of NZ, but the collection of data, analytics and visualisation makes their management more efficient. They’ve also managed to decode Kiwi calls (the bird, not the people).

Former colleague Simon Elisha continued with a strong positioning of the efforts that the AWS engineering teams have been putting into resilience, multi-layered security, hardware design, physical security, and video CCTV archiving; and then moved into the customer accessible security services for Data Protection, Identity Directory & Access, Detective Controls & Management, and Networking & Infrastructure.

He then dived into a customer controlled capability for S3 (Object Storage) that was surfaced at the global re:Invent conference in 2018: Block Public Access. This capability can be leveraged at a per-bucket level, as well as at an AWS-account-wide level (which would be effective for any new S3 Buckets created, regardless of their per-bucket settings).

S3 has been around for many years, and has expanded from a small set of micro services to over 200 today (as disclosed at AWS Sydney Summit 2019). It can by itself act as a public web server for the content in a bucket; can have public anonymous access; can encrypt in flight and at rest; and offers storage tiering, life-cycle, logging, and much more. These days, I don’t encourage teams to serve content to the web directly via S3, but via the CloudFront global CDN (today: 189 points of presence – see this). And with the ability for CloudFront to access S3 buckets using an Origin Access Identity, it’s possible to remove all anonymous access from S3 and enable Block Public Access – something we have done for many of our customers. This pattern forces access to the data from the Internet to come via an endpoint set to my desired TLS policy, with a custom named TLS Certificate, and, as a bonus, I can set (inject) my specific security headers on the content being served. For example, check out securityheaders.com (hi Scott) and test www.advara.com.

Simon also spoke about the technology stack (not quite the full OSI stack, for those that recall):

- Physical Layer: secure facilities with optical encryption using AES 256

- Data Link Layer: MACsec IEEE 802.1AE

- Network Layer: VPN, Peering

- Transport Layer: s2n, NLB-TLS, ALB, CloudFront and ACM

- Application Layer: Crypto SDK, Server Side Encryption

After a quick tour of Security Hub, and then Iain speaking about some of the training and reskilling initiatives, it was time for another customer.

This was the second time I had seen this, with the drone having been shown at the AWS Commercial Summit in Sydney in July. However, Dr Scully-Power’s presentation was, to be honest, very powerful. Watch the video and hear for yourself about rescuing kids from rips, spotting sharks, crocs and more.

The AWS DeepRacer (reinforcement learning autonomous vehicles) was set up and competing again, part of the effort to lower the barrier of entry for customers into machine learning. The exhibitor hall continued to have technology and consulting partners showcasing their achievements and capabilities, as well as the various AWS customer-facing teams such as the certification teams, concierge team, and Solution Architects (now split further by services and specialisations).

In the break-out sessions (actually held on the Tuesday), there was a track dedicated to Healthcare, a track for High Performance Compute, and more. There were presentations for the fledgling Australian space community (see Ground Station), on decoupling workloads, connectivity, etc.

Once again a group of local school children were given the opportunity to attend and see the innovation being discussed, and a stream of activities aimed at helping show them career pathways.

Of course, in specific break out streams were media analyst briefings, executive briefings, Public Sector partner forums and workshops.

I also had the opportunity to stop by the Modis Canberra office, where Mark Smith (with whom I have worked for nearly half a decade) and I spoke at length to the local team on the challenges and successes of our engagements with customers, delivering advanced, managed Cloud services and solutions.

That night, I returned to Perth for a day at work and a few hours with my family… before heading for the next adventure, the AWS Ambassador Global meetup in Seattle (next post).

AWS: Save up to 19.2% on t* instances

Despite what AWS may say, the burstable CPUs are a workhorse for so many smaller workloads – the long tail of deployments in the cloud.

Yesterday saw the announcement of the AMD based T3a instance family as generally available in many regions. Memory and core-count match the previous T3 and T2 instance families of the same size, which makes comparisons rather easy.

Below are prices as shown today (25/Apr/2019) for Sydney ap-southeast-2:

| Size | t2 US$ | t3 US$ | t3a US$ | Diff t3a-t3 | % | Diff t3a-t2 | % |
| --- | --- | --- | --- | --- | --- | --- | --- |
| nano | .0073 | .0066 | .0059 | .0007 | 10.6 | .0014 | 19.2 |
| micro | .0146 | .0132 | .0118 | .0014 | 10.6 | .0028 | 19.2 |
| small | .0292 | .0264 | .0236 | .0028 | 10.6 | .0056 | 19.2 |
| medium | .0584 | .0528 | .0472 | .0056 | 10.6 | .0112 | 19.2 |
| large | .1168 | .1056 | .0944 | .0112 | 10.6 | .0224 | 19.2 |
| xlarge | .2336 | .2112 | .1888 | .0224 | 10.6 | .0448 | 19.2 |
| 2xlarge | .4672 | .4224 | .3776 | .0448 | 10.6 | .0896 | 19.2 |

As you can see, the saving from moving from an older family to the newest is consistent across the sizes: a 10.6% saving for the minor t3 to t3a move, but a larger 19.2% if you’re still back on t2.
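The percentages in the table can be re-derived from the hourly prices. Here is a minimal sketch in Python, using only the prices listed above; the names `PRICES` and `saving_pct` are mine, for illustration only.

```python
# On-demand prices in US$ per hour, copied from the table above
# (Sydney, ap-southeast-2, as shown on 25/Apr/2019).
PRICES = {
    "nano":    {"t2": 0.0073, "t3": 0.0066, "t3a": 0.0059},
    "micro":   {"t2": 0.0146, "t3": 0.0132, "t3a": 0.0118},
    "small":   {"t2": 0.0292, "t3": 0.0264, "t3a": 0.0236},
    "medium":  {"t2": 0.0584, "t3": 0.0528, "t3a": 0.0472},
    "large":   {"t2": 0.1168, "t3": 0.1056, "t3a": 0.0944},
    "xlarge":  {"t2": 0.2336, "t3": 0.2112, "t3a": 0.1888},
    "2xlarge": {"t2": 0.4672, "t3": 0.4224, "t3a": 0.3776},
}


def saving_pct(old: float, new: float) -> float:
    """Percentage saved when moving from the old hourly price to the new one."""
    return round((old - new) / old * 100, 1)


for size, price in PRICES.items():
    from_t3 = saving_pct(price["t3"], price["t3a"])
    from_t2 = saving_pct(price["t2"], price["t3a"])
    print(f"{size:8} t3->t3a {from_t3}%   t2->t3a {from_t2}%")
```

Swap in the prices for your own region to see whether the same ratios hold there.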

It’s worth looking at any pending Reservations you currently have for older families, and not jumping to this prematurely – you may end up paying twice.

Talking of which, Reservations are available for t3a as well. Looking at the Sydney price for a nano, it drops from the 5.6c/hr to 4c/hr; across the fleet, discounts on reserved versus on-demand for the t3a are up to 63%.

For those who don’t reserve – because you’re not ready to commit, perhaps? – then the simple change of family is an easy and low-risk way of reaping some savings. For example, a fleet of 100 small instances for a month on t2 swapped to t3a would reap a saving of US$2,172.48 – US$1,755.84 = US$416.64/month, or just shy of US$5,000 a year (AU$7,000).
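The fleet figures above work out if you assume a 744-hour (31-day) month; that assumption is mine, inferred from the numbers. A quick sketch of the arithmetic, with illustrative names:

```python
# Assumption: a 31-day month, which is what reproduces the figures quoted above.
HOURS_PER_MONTH = 24 * 31  # 744


def monthly_cost(hourly_usd: float, instances: int, hours: int = HOURS_PER_MONTH) -> float:
    """On-demand cost in US$ for a fleet running flat out for one month."""
    return round(hourly_usd * instances * hours, 2)


t2_fleet = monthly_cost(0.0292, 100)    # 100 x t2.small
t3a_fleet = monthly_cost(0.0236, 100)   # 100 x t3a.small
monthly_saving = round(t2_fleet - t3a_fleet, 2)
yearly_saving = round(monthly_saving * 12, 2)

print(t2_fleet, t3a_fleet, monthly_saving, yearly_saving)
```

Change the instance count and hourly prices to match your own fleet.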

YMMV, test your workload – and Availability Zones – for support of the t3a.

AWS Certification: Pearson VUE and PSI

With the announcement a few weeks back I thought I’d look back on where I can send my team to get certified. For the last few years, AWS Certification has only had their testing via PSI, and in Perth, that meant one venue, with two kiosks. Prior to that, there were more test centres (with Kryterion as the test provider, as per previous blog post in 2017).

But now Pearson VUE are in the mix alongside PSI, and the expansion is great.

There are now an additional 6 locations to get certified in Western Australia, including the first one outside of Perth by some 300+ kms:

- North Metropolitan TAFE, 30 Aberdeen St, Northbridge

- DDLS Perth, 553 Hay Street

- ATI-Mirage, Cloisters 863 Hay Street

- Edith Cowan, Joondalup

- North Metro TAFE, 35 Kendrew Crescent, Joondalup

- Market Creations, 7 Chapman Road, Geraldton

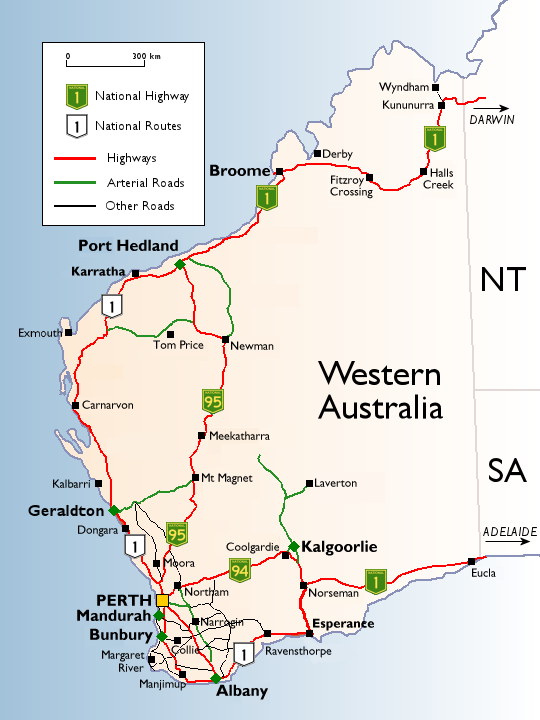

Geraldton is several hours’ drive north of Perth, at around 420kms (260mi), with a population of around 40,000. The rest of Western Australia north of that is probably only another 60,000 people in total across Karratha (16k), Carnarvon, Exmouth, Port Hedland, and Dampier.

Let’s get some perspective on these distances, for my foreign friends:

By Fikri (talk) (Uploads) – Fikri (talk) (Uploads), CC BY-SA 3.0, https://commons.wikimedia.org/w/index.php?curid=199809

For comparisons, check out this. Suffice to say, it’s a bloody long way. My wife lived for a while in Carnarvon, half way up the coast; that was around 10 hours driving to get there.

It would be interesting to see Busselton (pop 74k) and Albany, both to the south, have some availability here to help get people services without having to trek for days, or not bother at all.

S3 Public Access: Preventable SNAFUs

It’s happened again.

This time it is Facebook who left an Amazon S3 Bucket with publicly (anonymously) accessible data. 540 million breached records.

Previously, Verizon, Pocket iNet, GoDaddy, Booz Allen Hamilton, Dow Jones, WWE, Time Warner, Pentagon, Accenture, and more. Large, presumably trusted names.

Let’s start with the truth: objects (files, data) uploaded to S3, with no options set on the bucket or object, are private by default.

Someone has to either set a Bucket Policy to make objects anonymously accessible, or set a public ACL on each object, for objects to be shared.

Let’s be clear.

These breaches are the result of someone uploading data and setting the acl:public-read, or editing a Bucket’s overriding resource policy to facilitate anonymous public access.
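To make the bucket policy case concrete, here is a rough sketch of a check that flags a policy granting access to an anonymous principal. It is deliberately simplified: it ignores `Condition` blocks (so it can over-report) and it is not how AWS itself evaluates whether a bucket is public. The function name and the bucket name `example-bucket` are hypothetical.

```python
import json


def allows_anonymous(policy_json: str) -> bool:
    """Return True if any Allow statement names the wildcard principal.

    Simplified sketch: Condition blocks are ignored, so a policy that is
    restricted by condition will still be flagged.
    """
    policy = json.loads(policy_json)
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may not be in a list
        statements = [statements]
    for statement in statements:
        if statement.get("Effect") != "Allow":
            continue
        principal = statement.get("Principal")
        if principal == "*":
            return True
        if isinstance(principal, dict):
            aws = principal.get("AWS")
            values = aws if isinstance(aws, list) else [aws]
            if "*" in values:
                return True
    return False


# A policy of the kind behind these breaches: anyone may read every object.
public_policy = json.dumps({
    "Version": "2012-10-17",
    "Statement": [{
        "Effect": "Allow",
        "Principal": "*",
        "Action": "s3:GetObject",
        "Resource": "arn:aws:s3:::example-bucket/*",  # hypothetical bucket
    }],
})

print(allows_anonymous(public_policy))
```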

Having S3 accessible via authenticated http(s) is great. Having it available directly via anonymous http(s) is not, but historically that was a valid use case.

This week I have updated a client’s account, that serves a static web site hosted in S3, to have the master “Block Public Access” enabled on their entire AWS account. And I sleep easier. Their service experienced no downtime in the swap, no significant increase in cost, and the CloudFront caching CDN cannot be randomly side-stepped with requests to the S3 bucket.

Serving from S3 is terrible

So when you set an object public it can be fetched from S3 with no authentication. It can also be served over unencrypted HTTP (which is a terrible idea).

When hitting the S3 endpoint, the TLS certificate used matches the S3 endpoint hostname, which is something like s3.ap-southeast-2.amazonaws.com. Now that hostname probably has nothing to do with your business brand name, and something like files.mycompany.com may at least give some indication of affiliation of the data with your brand. But with the S3 endpoint, you have no choice.

Ignoring the unencrypted HTTP, the S3 endpoint TLS configuration for HTTPS is also rather loosely curated, as it is a public, shared endpoint with over a decade of backwards compatibility to deal with. TLS 1.0 is still enabled, which would be a breach of PCI DSS 3.2 (and TLS 1.1 is there too, which IMHO is next to useless).

It’s worth noting that there are dual-stack IPv4 and IPv6 endpoints, such as s3.dualstack.ap-southeast-2.amazonaws.com.

So how can we fix this?

CloudFront + Origin Access Identity

CloudFront allows us to select a TLS policy, pre-defined by AWS, but permitting us to restrict available protocols and ciphers. This lets us remove “early crypto” and be TLS 1.2 only.

CloudFront also permits us to use a customer specific name, for SNI enabled clients for no additional cost, or a dedicated IP address (not worth it, IMHO).
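As a sketch of what those two choices look like in practice, below is the `ViewerCertificate` fragment of a CloudFront distribution configuration, built as a Python dict. The keys and values follow the CloudFront API as I understand it; `TLSv1.2_2018` is one of the AWS pre-defined policies and newer ones may be preferable by the time you read this. The certificate ARN is a made-up placeholder, and the helper name is mine.

```python
def viewer_certificate(acm_certificate_arn: str) -> dict:
    """Build the ViewerCertificate part of a CloudFront distribution config.

    Sketch only: this is one fragment of a much larger distribution config.
    """
    return {
        # Custom-named certificate from ACM (for CloudFront it must live in us-east-1).
        "ACMCertificateArn": acm_certificate_arn,
        # SNI rather than a dedicated IP address: no additional cost.
        "SSLSupportMethod": "sni-only",
        # Pre-defined AWS policy that removes TLS 1.0 and 1.1.
        "MinimumProtocolVersion": "TLSv1.2_2018",
    }


# Hypothetical ARN, for illustration only.
fragment = viewer_certificate(
    "arn:aws:acm:us-east-1:111122223333:certificate/example"
)
print(fragment)
```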

Origin Access Identities give CloudFront a rolling API keypair that the service can use to access S3. Your S3 bucket then has a policy permitting this Identity access to the bucket’s objects.
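A bucket policy of that kind might look like the sketch below. The principal ARN format is the one AWS documents for Origin Access Identities; the bucket name, the OAI ID and the function name are placeholders of mine.

```python
import json


def oai_bucket_policy(bucket: str, oai_id: str) -> str:
    """Return a bucket policy (as JSON) letting one CloudFront OAI read objects."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "AllowCloudFrontOAIRead",
            "Effect": "Allow",
            "Principal": {
                "AWS": "arn:aws:iam::cloudfront:user/"
                       f"CloudFront Origin Access Identity {oai_id}"
            },
            "Action": "s3:GetObject",          # read-only, objects only
            "Resource": f"arn:aws:s3:::{bucket}/*",
        }],
    }
    return json.dumps(policy, indent=2)


# Hypothetical bucket name and OAI ID.
print(oai_bucket_policy("example-bucket", "E2EXAMPLEOAIID"))
```

Note there is no wildcard principal anywhere in it, which is what lets Block Public Access be switched on afterwards.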

With this access in place, you can then flick the “Block Public Access” setting account-wide, possibly on the bucket first, then the account-wide settings last.
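For those who would rather script it than click through the console, here is a minimal sketch using boto3 (assuming it is installed and credentials are configured). The bucket name and account ID are for you to supply; the function names are mine. Test against a non-production bucket first.

```python
# All four Block Public Access switches turned on.
BLOCK_ALL = {
    "BlockPublicAcls": True,
    "IgnorePublicAcls": True,
    "BlockPublicPolicy": True,
    "RestrictPublicBuckets": True,
}


def block_bucket(bucket: str) -> None:
    """Step one: enable Block Public Access on a single bucket."""
    import boto3  # imported lazily so the constants can be used without boto3
    boto3.client("s3").put_public_access_block(
        Bucket=bucket,
        PublicAccessBlockConfiguration=BLOCK_ALL,
    )


def block_account(account_id: str) -> None:
    """Step two, once the buckets are confirmed OK: enable it account-wide."""
    import boto3
    boto3.client("s3control").put_public_access_block(
        AccountId=account_id,
        PublicAccessBlockConfiguration=BLOCK_ALL,
    )
```

Doing the bucket first gives you a chance to confirm CloudFront is still serving content before you commit the whole account.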

One thing to work out is your use of URLs ending in “/”. Using Lambda@Edge, we convert these to a request for “/index.html”. Similarly, URL paths that end in “/foo” with no typical suffix get mapped to “/foo/index.html”.
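The rewrite rule could be sketched as below, written here in Python for an origin-request trigger. This is an illustration of the mapping just described, not the exact function we run; "no typical suffix" is approximated as "no dot in the last path segment", which is my assumption.

```python
def rewrite_uri(uri: str) -> str:
    """Map directory-style paths to the index.html object beneath them."""
    if uri.endswith("/"):
        return uri + "index.html"
    last_segment = uri.rsplit("/", 1)[-1]
    if "." not in last_segment:       # e.g. "/foo" has no file suffix
        return uri + "/index.html"
    return uri                        # e.g. "/css/site.css" is left alone


def handler(event, context):
    """Lambda@Edge entry point: rewrite the URI, then pass the request on."""
    request = event["Records"][0]["cf"]["request"]
    request["uri"] = rewrite_uri(request["uri"])
    return request


for path in ("/", "/foo", "/foo/", "/css/site.css"):
    print(path, "->", rewrite_uri(path))
```

If your site has real objects without a suffix, this rule will need adjusting.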

Governance FTW?

So, have you checked if Block Public Access is enabled in your account(s)? How about a sweep through right now?
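A sweep can be as simple as the sketch below, assuming boto3 is installed and you have credentials for each account. It treats "no configuration set" as "not blocked". The function names are mine, and you supply the account ID.

```python
REQUIRED_FLAGS = (
    "BlockPublicAcls",
    "IgnorePublicAcls",
    "BlockPublicPolicy",
    "RestrictPublicBuckets",
)


def fully_blocked(config: dict) -> bool:
    """True only when all four Block Public Access switches are on."""
    return all(config.get(flag) is True for flag in REQUIRED_FLAGS)


def account_is_blocked(account_id: str) -> bool:
    """Ask AWS for the account-wide setting and evaluate it."""
    import boto3  # imported lazily; only needed for the live check
    from botocore.exceptions import ClientError

    client = boto3.client("s3control")
    try:
        response = client.get_public_access_block(AccountId=account_id)
    except ClientError as err:
        # Nothing configured at all means nothing is blocked.
        if err.response["Error"]["Code"] == "NoSuchPublicAccessBlockConfiguration":
            return False
        raise
    return fully_blocked(response["PublicAccessBlockConfiguration"])
```

Run `account_is_blocked` once per account; anything that returns `False` deserves a closer look.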

If you’re not sure about this, contact me.