I’ve been talking for the last number of years about some of the security changes in the web/browser/PKI landscape, many of which have happened quietly enough that those not paying attention may have missed them.

One very interesting element has been a modern browser capability called Network Error Logging (NEL). It’s significant because it fixes a problem in the web space where errors may happen on the client side, and the server side hears nothing about them.

You can read the NEL spec here.

Adopting NEL is another tool in your DevOps armoury, to drive operational excellence and continuous improvement of your deployed web applications, helping to retain customers and increase business value.

Essentially, this is a simple HTTP header string that can be set, and browsers that recognise it will cache that information for a period (that you specify). If the browser has any of a whole set of operational issues while using your web site, then it has an opportunity to submit a report to your error logging endpoint (or cache it to submit later).
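As a rough illustration of what that header looks like, the sketch below builds the `NEL` header together with the companion `Report-To` header that names the collection endpoint. The group name `default`, the endpoint URL `https://nel.example.com/` and the 30-day cache period are assumptions for illustration, and the helper name `build_nel_headers` is hypothetical.

```python
import json


def build_nel_headers(endpoint_url, max_age_seconds=30 * 24 * 3600,
                      group="default", failure_fraction=1.0):
    """Return the two response headers a site sends to enable NEL.

    Report-To names a reporting group and its endpoint; NEL tells the
    browser to send network error reports to that group.
    """
    report_to = {
        "group": group,
        "max_age": max_age_seconds,          # how long the browser caches this
        "endpoints": [{"url": endpoint_url}],
    }
    nel = {
        "report_to": group,                  # must match the Report-To group
        "max_age": max_age_seconds,
        "failure_fraction": failure_fraction,
    }
    return {
        "Report-To": json.dumps(report_to),
        "NEL": json.dumps(nel),
    }


headers = build_nel_headers("https://nel.example.com/")
for name, value in headers.items():
    print("{}: {}".format(name, value))
```

Both header values are plain JSON, so whatever sets your response headers (web server config, CDN, or application code) only needs to emit these two strings.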

Prior to this, you would have to rely on the generosity of users reporting issues for you. The chance of a good Samaritan (white hat) getting through a customer support line to report issues is... small! Try calling your local grocery store to tell them they have a JavaScript error. Indeed, for this issue, we have RFC 9116 for security.txt.

Having your users report your bad news is kind of like this scene from the 1995 James Bond film GoldenEye:

So, what’s the use case for Network Error Logging? I’ll split this into four scenarios:

- Developer environments

- Testing environments

- Non-production UAT environments

- Production environments

Developer Environments

Developers love to code, and will produce vast amounts of code, and have it work in their own browser. They’ll also respond to errors in their own environments. But without the NEL error logs, they may miss the critical point at which a bug occurs, when something fails to render in the browser.

NEL gives developers the visibility they are lacking when they hit issues, which would otherwise need screen captures from the browser session (these are still useful, particularly if your screen capture includes the complete time, including seconds, and the system is NTP synchronised).

With stricter requirements coming (such as Content Security Policies being mandated by the PCI DSS version 4 draft on payment processing pages), the sooner you can give developers visibility of why operations have failed, the more likely the software project is to succeed when it makes it to a higher environment.

So, developers should have a non-production NEL endpoint, just to collect logs, so they can review and sort them, and effect change. It’s not likely to be high-volume reporting here, as it’s just your own development team using it, and old reports are quickly worthless (apart from identifying regressions).

Testing Environments

Like developers, Test Engineers are trying to gather evidence of failures to feed into trouble ticketing systems. A NEL endpoint gives Testers this evidence. Again, the volume of reporting may be pretty low, but the value of the reporting will again help stop errors reaching higher environments.

Non-Production UAT Environments

This is your last chance to ensure that the next release into production is not hitting silly issues. The goal here is to make the volume of NEL reports approach zero, and any that do come in are likely to be blockers. Depending on the size of your UAT validation, the volume will still be low.

Production Environments

This is where NEL becomes even more important. Your security and operations teams both need real-time visibility of reporting, as the evidence here could be indicative of active attempts to subvert the browser. Of course, the volume of reports could also be much larger, so be prepared to reduce the fraction of requests that get reported (the NEL policy lets you set a sampling fraction) to keep the volume manageable. It may also be worth using a commercial NEL endpoint provider for this environment.
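A NEL policy carries a `failure_fraction` (and a `success_fraction`) between 0 and 1 that tells the browser what proportion of eligible requests to report. The following is only a back-of-the-envelope sketch for choosing a value, assuming you can estimate your daily failed requests and the report volume you are able to handle; both figures below are made up, and the helper name is hypothetical.

```python
def failure_fraction_for(expected_failures_per_day, max_reports_per_day):
    """Pick a sampling fraction so expected reports stay under a daily cap."""
    if expected_failures_per_day <= 0:
        return 1.0  # nothing expected, so report everything
    fraction = max_reports_per_day / expected_failures_per_day
    return min(1.0, fraction)  # a fraction can never exceed 1.0


# Assumed figures: 200,000 failed requests a day, capacity for 10,000 reports.
print(failure_fraction_for(200_000, 10_000))
```

In lower environments, where volume is small, leaving the fraction at 1.0 keeps every report.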

Running your own NEL Endpoint

There is nothing stopping you from running your own NEL endpoint, and this is particularly useful in low-volume, non-production scenarios. It’s relatively simple; you just need to think about your roll-out:

- Let every project define their own NEL endpoints, perhaps one per environment, and let them collect and process their own reports

- Provide a single central company-wide non-production NEL endpoint, but then give all developers access to the reports

- Somewhere in the middle of these above two options?

Of course, none of these are One Way Doors. You can always adjust your NEL endpoints, by just updating the NEL headers you have set on your applications. If you don’t know how to adjust HTTP header strings on your applications and set arbitrary values, then you already have a bigger issue in that you don’t know what you’re doing in IT, so please get out of the way and allow those who do know to get stuff done!

Your NEL endpoint should be defined as a hostname, with a valid (trusted) TLS certificate, listening for an HTTP POST over HTTPS. It’s a simple JSON payload that you will want to store somewhere. You have a few choices as to what to do with this data when submitted:

- Reject it outright. Maybe someone is probing you, or submitting malicious reports (malformed, buffer overflow attempt, SQL injection attempt, etc)? But these events themselves may be of interest…

- Store it:

  - In a relational database

  - In a No-SQL database

  - In something like ElasticSearch

- Relay it:

  - Via email to a Distribution List or alias

  - Integration to another application or SIEM

My current preference is to use a No-SQL store, with a set retention period on each report.

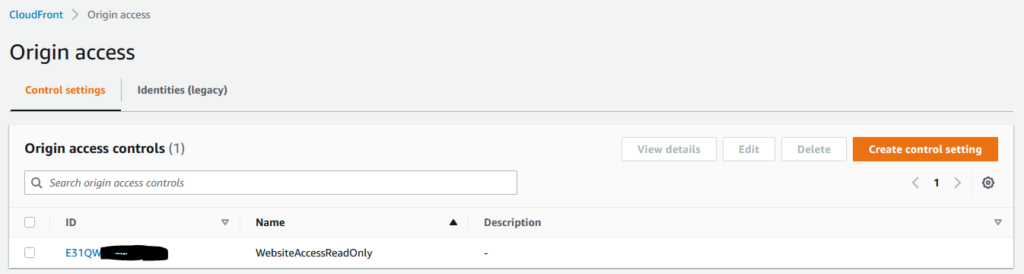

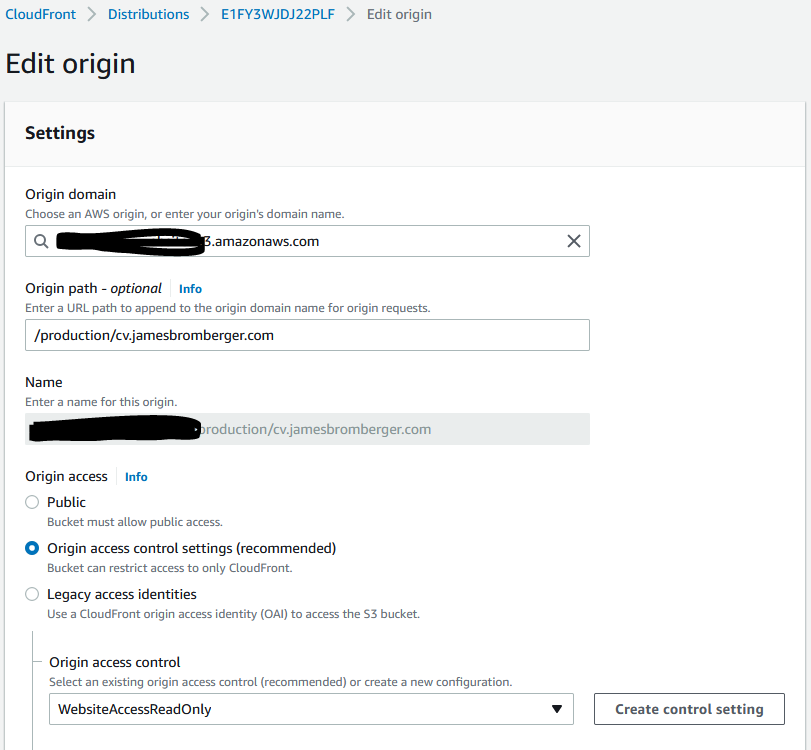

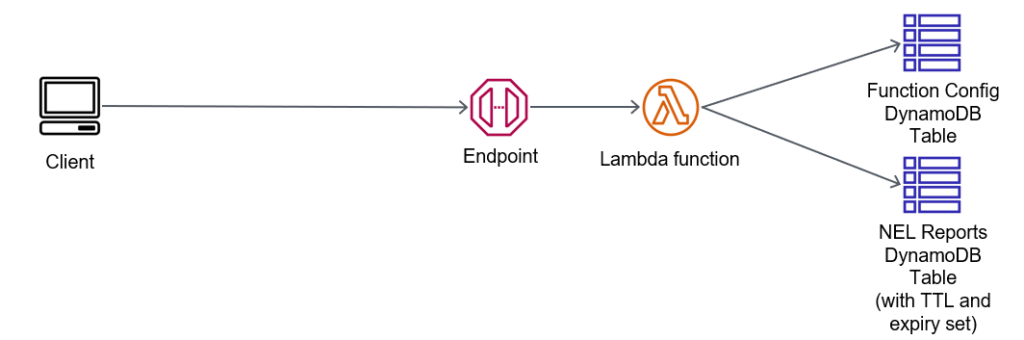

NEL in AWS API Gateway, Lambda and DynamoDB

The architecture for this is very simple:

We have one table which controls access for reports to be submitted; it’s got config items that are persistent. I like to have a config for banned IPs (so I can block malicious actors), and perhaps banned DNS domains in the NEL report content. Alternatively, I may have an allow list of DNS domains (possibly with wildcards, such as *.example.com).

My Lambda function will get this content, and then evaluate the source IP address of the report, the target DNS domain in the report, and work out if it’s going to store it in the Reports table.
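The wildcard allow list mentioned above could be checked along these lines. This is a minimal sketch that assumes shell-style patterns such as `*.example.com`; the function name `hostname_allowed` and the pattern list are hypothetical and are not part of the Lambda code in this post.

```python
from fnmatch import fnmatchcase


def hostname_allowed(hostname, allowed_patterns):
    """Return True if hostname matches any allow-list pattern.

    Patterns use shell-style wildcards, e.g. "*.example.com".
    Hostnames are case-insensitive, so both sides are lower-cased.
    """
    hostname = hostname.lower().rstrip(".")
    return any(fnmatchcase(hostname, p.lower()) for p in allowed_patterns)


allowed = ["example.com", "*.example.com"]
print(hostname_allowed("www.example.com", allowed))
print(hostname_allowed("www.example.org", allowed))
```

Note that `*` here also matches dots, so `*.example.com` covers subdomains at any depth; the bare domain needs its own entry.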

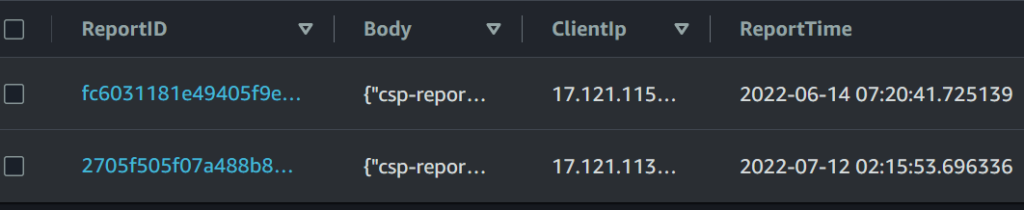

When inserting the JSON into the report table, I’m also going to record:

- The current time (UTC)

- The source address the report came from

Here’s an example report that’s been processed into the table:

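This is what the report (Body) looks like. Note that this particular example is a Content Security Policy violation report (sent via report-uri) rather than a network error report; the same endpoint collects both: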

{
  "csp-report": {
    "document-uri": "https://www.example.com/misc/video.html",
    "referrer": "",
    "violated-directive": "default-src 'none'",
    "effective-directive": "child-src",
    "original-policy": "default-src 'none'; img-src 'self' data: https://docs.aws.amazon.com https://api.mapbox.com/ https://unpkg.com/leaflet@1.7.1/dist/images/ ; font-src data: ; script-src 'self' blob: https://cdnjs.cloudflare.com/ajax/libs/video.js/ https://ajax.googleapis.com https://cdnhs.cloudflare.com https://sdk.amazonaws.com/js/ https://player.live-video.net/1.4.1/amazon-ivs-player.min.js https://player.live-video.net/1.4.1/ https://unpkg.com/leaflet@1.7.1/dist/leaflet.js https://unpkg.com/leaflet.boatmarker/leaflet.boatmarker.min.js https://unpkg.com/leaflet.marker.slideto@0.2.0/ 'unsafe-inline'; style-src 'self' https://cdnjs.cloudflare.com/ajax/libs/video.js/ https://unpkg.com/leaflet@1.7.1/dist/leaflet.css ; frame-ancestors 'none'; form-action 'self'; media-src blog:; connect-src 'self' *.live-video.net wss://e2kww8wsne.execute-api.ap-southeast-2.amazonaws.com/production wss://boat-data.example.com ; object-src: self ; base-uri 'self'; report-to default; report-uri https://nel.example.com/",
    "blocked-uri": "blob",
    "status-code": 0,
    "source-file": "https://player.live-video.net",
    "line-number": 6,
    "column-number": 63072
  }
}

And here is the Lambda code that is validating the reports:

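import datetime
import ipaddress
import json
import os
import re
import uuid
from urllib.parse import urlparse

import boto3
from boto3.dynamodb.conditions import Key

# Assumption: the table names are not shown in this post, so they are read
# from environment variables here, with placeholder defaults.
dynamodb = boto3.resource('dynamodb')
config_table = dynamodb.Table(os.environ.get('CONFIG_TABLE', 'NELConfig'))
report_table = dynamodb.Table(os.environ.get('REPORT_TABLE', 'NELReports'))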

def lambda_handler(event, context):
    # Reject reports from banned addresses, or from addresses outside the
    # permitted list (when one is configured).
    address = report_src_addr(event)
    if address is not False:
        if report_ip_banned(address) or not report_ip_permitted(address):
            return {
                'statusCode': 403,
                'body': json.dumps({"Status": "rejected", "Message": "Report was rejected from IP address {}".format(address)})
            }
    # Reject reports that fail request validation or the hostname checks.
    if not report_hostname_permitted(event):
        return {
            'statusCode': 403,
            'body': json.dumps({"Status": "rejected", "Message": "Reports for subject not allowed"})
        }
    report_uuid = save_report(event)
    if not report_uuid:
        return {
            'statusCode': 403,
            'body': json.dumps({"Status": "rejected"})
        }
    return {
        'statusCode': 200,
        'body': json.dumps({"Status": "accepted", "ReportID": report_uuid})
    }

def save_report(event):
    # Store the raw report body along with the receive time (UTC) and the
    # source address it came from.
    report_uuid = uuid.uuid4().hex
    client_ip_str = str(report_src_addr(event))
    print("Saving report {} for IP {}".format(report_uuid, client_ip_str))
    response = report_table.put_item(
        Item={
            "ReportID": report_uuid,
            "Body": event['body'],
            "ReportTime": str(datetime.datetime.utcnow()),
            "ClientIp": client_ip_str
        }
    )
    # Compare by value: "is 200" tests object identity, not equality.
    if response['ResponseMetadata']['HTTPStatusCode'] == 200:
        return report_uuid
    return False

def report_ip_banned(address):
    # Look up the single BannedIPs config item and test the address against
    # each listed network (CIDR notation is supported).
    fe = Key('ConfigName').eq("BannedIPs")
    response = config_table.scan(FilterExpression=fe)
    if 'Items' not in response:
        print("No items in Banned IPs")
        return False
    if len(response['Items']) != 1:
        print("Found {} Items for BannedIPs in config".format(len(response['Items'])))
        return False
    if 'IPs' not in response['Items'][0]:
        print("No IPs in first item")
        return False
    ip_networks = []
    for banned in response['Items'][0]['IPs']:
        try:
            #print("Checking if we're in {}".format(banned))
            ip_networks.append(ipaddress.ip_network(banned))
        except Exception as e:
            print("*** EXCEPTION")
            print(e)
            return False
    for banned in ip_networks:
        # Only compare like with like (IPv4 against IPv4, IPv6 against IPv6).
        if address.version == banned.version:
            if address in banned:
                print("{} is banned (in {})".format(address, banned))
                return True
    #print("Address {} is not banned!".format(address))
    return False

def report_ip_permitted(address):
    # With no PermittedIPs config item, every address is permitted;
    # otherwise the address must fall inside one of the listed networks.
    fe = Key('ConfigName').eq("PermittedIPs")
    response = config_table.scan(FilterExpression=fe)
    if len(response['Items']) == 0:
        return True
    if len(response['Items']) != 1:
        print("Found {} Items for PermittedIPs in config".format(len(response['Items'])))
        return False
    if 'IPs' not in response['Items'][0]:
        print("IPs not found in permitted list DDB response")
        return False
    ip_networks = []
    for permitted in response['Items'][0]['IPs']:
        try:
            ip_networks.append(ipaddress.ip_network(permitted, strict=False))
        except Exception as e:
            print("permit: *** EXCEPTION")
            print(e)
            return False
    for permitted in ip_networks:
        if address.version == permitted.version:
            if address in permitted:
                print("permit: Address {} is permitted".format(address))
                return True
    print("Address {} not permitted?".format(address))
    return False

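def report_hostname_permitted(event):
    # Only accept POST requests carrying a body of at most 100 KB.
    if not event.get('body'):
        print("No body")
        return False
    if 'httpMethod' not in event:
        print("No method")
        return False
    if event['httpMethod'].lower() != 'post':
        print("Method is {}".format(event['httpMethod']))
        return False
    if len(event['body']) > 1024 * 100:
        print("Body too large")
        return False
    try:
        reports = json.loads(event['body'])
    except Exception as e:
        print(e)
        return False
    # Assumption: Reporting API payloads arrive as a JSON array of reports,
    # each with a 'url', while a CSP report-uri payload is a single object
    # whose 'csp-report' member carries a 'document-uri'.
    if isinstance(reports, dict):
        reports = [reports]
    if not isinstance(reports, list):
        return False
    hostnames = []
    for report in reports:
        print(report)
        if not isinstance(report, dict):
            return False
        csp = report.get('csp-report')
        subject = report.get('url') or (csp.get('document-uri') if isinstance(csp, dict) else None)
        if not isinstance(subject, str) or not subject:
            return False
        hostnames.append(urlparse(subject).netloc)
    # Reject the submission if any reported hostname matches a banned pattern.
    fe = Key('ConfigName').eq("BannedServerHostnames")
    response = config_table.scan(FilterExpression=fe)
    if len(response['Items']) == 0:
        print("No BannedServerHostnames")
        return True
    for item in response['Items']:
        if 'Hostname' not in item:
            continue
        for expression in item['Hostname']:
            for hostname in hostnames:
                if re.search(expression + "$", hostname):
                    print("Rejecting {} as it matched on {}".format(hostname, expression))
                    return False
    return True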

def report_src_addr(event):
    # API Gateway supplies the caller's address in the request context.
    try:
        addr = ipaddress.ip_address(event['requestContext']['identity']['sourceIp'])
    except Exception as e:
        print(e)
        return False
    #print("Address is {}".format(addr))
    return addr

def parse_X_Forwarded_For(event):
    # Return the public addresses listed in X-Forwarded-For, skipping any
    # loopback or private entries; False if the header is missing or invalid.
    if 'headers' not in event:
        return False
    if 'X-Forwarded-For' not in event['headers']:
        return False
    address_strings = [x.strip() for x in event['headers']['X-Forwarded-For'].split(',')]
    addresses = []
    for address in address_strings:
        try:
            new_addr = ipaddress.ip_address(address)
            if new_addr.is_loopback or new_addr.is_private:
                print("X-Forwarded-For {} is loopback/private".format(new_addr))
            else:
                addresses.append(new_addr)
        except Exception as e:
            print(e)
            return False
    return addresses

You’ll note that I have a limit on the size of a NEL report: 100KB of JSON is more than enough. I’m also handling CIDR notation for blocking (eg, 130.0.0.0/16).
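For comparison with the CSP report shown earlier, a network error report delivered through the Reporting API arrives as a JSON array, with the failing URL at the top level and the error detail under `body`. The sketch below parses a made-up sample of that shape; the sample values and the function name `summarise_nel_reports` are illustrative assumptions, not output from a real browser.

```python
import json
from urllib.parse import urlparse

# Made-up sample in the shape of a Reporting API "network-error" payload.
SAMPLE_BODY = json.dumps([
    {
        "age": 0,
        "type": "network-error",
        "url": "https://www.example.com/misc/video.html",
        "body": {
            "phase": "connection",
            "type": "tcp.refused",
            "status_code": 0,
            "elapsed_time": 823,
            "sampling_fraction": 1.0,
        },
    }
])


def summarise_nel_reports(raw_body):
    """Return (hostname, error type) pairs for network-error reports."""
    summary = []
    for report in json.loads(raw_body):
        if report.get("type") != "network-error":
            continue  # other report types can share the same endpoint
        hostname = urlparse(report.get("url", "")).hostname
        error_type = report.get("body", {}).get("type", "unknown")
        summary.append((hostname, error_type))
    return summary


print(summarise_nel_reports(SAMPLE_BODY))
```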

Operational Focus

Clearly, to use this, you’ll want to push the Lambda function into a repeatable template, along with the API Gateway and DynamoDB tables.

You may also want to have a Time To Live (TTL) attribute on the Item being submitted in the save_report() function, set to the current time (Unix time) plus a number of seconds to retain (perhaps a month), and configure TTL expiry on the DynamoDB table.
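A minimal sketch of that TTL calculation follows. The attribute name `ExpiresAt`, the 30-day retention and the helper name `with_expiry` are assumptions for illustration; DynamoDB only requires that the TTL attribute is a Number holding Unix epoch seconds.

```python
import time

RETENTION_SECONDS = 30 * 24 * 3600  # assumed retention: roughly one month


def with_expiry(item, now=None, retention_seconds=RETENTION_SECONDS):
    """Return a copy of item with an epoch-seconds expiry attribute added."""
    now = int(time.time()) if now is None else int(now)
    expiring = dict(item)  # leave the caller's dict untouched
    expiring["ExpiresAt"] = now + retention_seconds
    return expiring


item = with_expiry({"ReportID": "abc123", "Body": "{}"}, now=1_700_000_000)
print(item["ExpiresAt"])
```

The resulting dict could be passed as the `Item` in `put_item`, with TTL enabled on the table for the same attribute name (for example via the DynamoDB client's `update_time_to_live` call).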

You may also want to generate some custom CloudWatch metrics, based upon the data being submitted; perhaps per hostname or environment, to get metrics on the rate of errors being reported.
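One possible shape for such a metric is sketched below: a single data point per report, dimensioned by hostname and environment. The metric name `ReportsReceived`, the dimension names and the `NEL` namespace are all assumptions chosen for illustration.

```python
from urllib.parse import urlparse


def metric_datum_for(report_url, environment):
    """Build one CloudWatch MetricData entry counting a single report."""
    hostname = urlparse(report_url).hostname or "unknown"
    return {
        "MetricName": "ReportsReceived",
        "Dimensions": [
            {"Name": "Hostname", "Value": hostname},
            {"Name": "Environment", "Value": environment},
        ],
        "Value": 1,
        "Unit": "Count",
    }


datum = metric_datum_for("https://www.example.com/misc/video.html", "production")
print(datum)
# Inside the Lambda function this could then be published with, for example:
#   boto3.client("cloudwatch").put_metric_data(
#       Namespace="NEL", MetricData=[datum])
```

Keeping the dimension set small matters here, since each distinct combination of dimension values becomes its own metric.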

Summary

Hopefully the above is enough to help you understand NEL and capture these reports from your web clients. In a production environment you may want to look at report-uri.com; in non-production, you may want to roll your own as above.