Many workloads run in the AWS Cloud on traditional virtual machines (Instances) using traditional IPv4 networking. Over the last few years, we’ve seen steady growth in IPv6 adoption globally. For those who haven’t started this journey yet, here are some notes on what you may want to look at as you start to embrace the future of the Internet.

It should be noted that this transition is a two way street:

- you need to get ready to offer your digital services to your clients over both IPv4 and IPv6 (Dual Stack)

- the services you depend on need to offer (listen on) an IPv6 address, probably via a gradual transition in which they offer both IPv4 and IPv6 for a (long) period of time

Within your internal (to your VPC) network architecture you can use either network protocol: the initial focus needs to be on enabling your incoming traffic to use either IPv4 or IPv6.

Your transport layer security (TLS) should be identical on either network protocol. IP is just the network layer that carries it.

Here are the steps:

- VPC Changes

- Subnet Changes

- Load Balancers Changes

- Routing Changes

- Security Group Changes

- DNS Changes

VPC Configuration

Adding an IPv6 address block is reasonably simple in VPC. While you can allocate from your own assigned pool, it’s far easier to use the AWS pool; it’s ready to go and doesn’t need any other preparation.

There are three ways to add an IPv6 address allocation:

- In the console, via ClickOps

- Via the API (including the CLI)

- Via the CloudFormation template that defines your VPC – highly recommended

Assigning the address block to the VPC does not actually use it, and should make zero impact to already running workloads. You should be safe to apply this at any time.

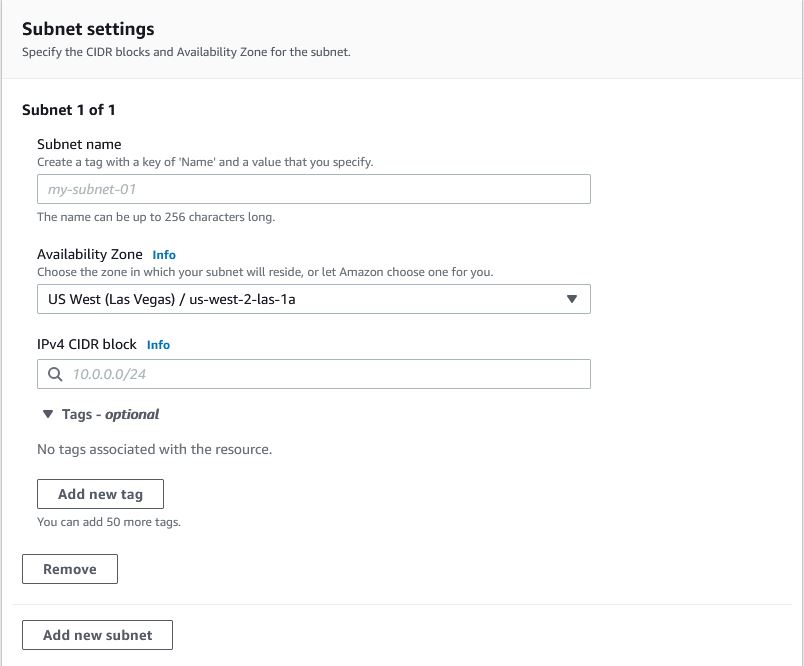

Subnet Configuration

Once the VPC has an allocation, we can then update existing subnets to also include an allocation from within the VPC’s range. The key difference we see here is that in IPv4 we can choose the size of the subnet, but in IPv6 you cannot: every IPv6 allocation to a subnet is a /64, which is about 18 billion billion IP addresses.

You can undo an allocation if no Network interfaces (ENIs) are present in the subnet using those addresses.

The configuration is relatively simple: you get to choose which slice of the VPC IPv6 address block will be used for which subnet. I follow a pretty simple rule: I anticipate that my VPCs will perhaps one day spread across 4 Availability Zones, so I allocate subnets sequentially across Availability Zones in order to be able to reference the range via a supernet.

The reason for this is:

- subnetting is done in powers of two: so for contiguous addressing (supernetting) we’re looking at using two AZs, four AZs, or eight AZs, etc.

- two Availability Zones is insufficient. If one fails, then you are running on a single Availability Zone during the incident (which may last several hours). This AZ may be constrained in capacity, while other AZs may be underutilised. Hence we want to use at least three AZs, so that fault tolerance can be restored DURING a single AZ outage

Most Regions have between three and five AZs. Preparing for eight in most Regions would reserve address space we’ll likely never allocate.

Hence, starting with public subnets, we want to sequentially allocate them with space to accommodate four AZs. These allocations are a hexadecimal number between 00 and FF – and hence a 256 limit on the total number of subnets. If we recall the four AZ allocation, then that’s 64 sets of Subnets across all AZs.
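To make the arithmetic above concrete, here is a minimal sketch using Python's standard `ipaddress` module. The /56 block is a made-up value from the IPv6 documentation range (AWS assigns your real block), and the `tier` helper and `AZ_SLOTS` name are hypothetical, purely for illustration.

```python
import ipaddress

# Hypothetical /56 taken from the documentation prefix 2001:db8::/32;
# your VPC's real block is whatever AWS (or your own pool) assigns.
vpc_block = ipaddress.ip_network("2001:db8:1234:5600::/56")

# A /56 splits into 256 subnets of /64: the 00..FF slice described above.
subnets = list(vpc_block.subnets(new_prefix=64))

AZ_SLOTS = 4  # reserve room for four Availability Zones per set of subnets


def tier(index):
    """Return (supernet, per-AZ subnets) for the index-th set of four /64s."""
    start = index * AZ_SLOTS
    group = subnets[start:start + AZ_SLOTS]
    # Four contiguous, aligned /64s are covered by a single /62.
    supernet = group[0].supernet(new_prefix=62)
    return supernet, group


public_supernet, public_subnets = tier(0)
print(len(subnets), "subnets,", len(subnets) // AZ_SLOTS, "sets of four")
print("public supernet:", public_supernet)
for az_index, net in enumerate(public_subnets):
    print("  AZ slot", az_index, net)
print("addresses per /64:", subnets[0].num_addresses)
```

Because each set of four starts on a multiple of four, one /62 always covers it, which is what lets a single route or rule reference all the public (or all the private) subnets at once.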

Again, you can allocate these by:

- Click Ops in the console on each existing subnet (or when creating new subnets)

- API call (including the CLI)

- CloudFormation template – recommended – in which case, look at the Fn::Cidr function to calculate the allocation. Check out my post from March 2018 on this.

If your focus is to start with your services being dual-stack available, then the only subnets you need to allocate initially are the Public Subnets: the subnets where your client facing (internet facing) load balancers are.

Once again, there’s no interruption to existing traffic during this change; indeed you’re less than half way through the required changes.

You may also allocate the rest of your private subnets at this time if you wish.

Routing Changes

For public subnets to function, they need a default IPv6 route via the existing Internet Gateway (IGW). This looks like “::/0”, and when pointing to the IGW, it permits two way traffic just like IPv4. Your set of public subnets will need this route, and this can be done at any time: permitting IPv6 routing won’t start clients using it.

If you have private subnets with IPv6 allocations, and you want them to be able to make outbound requests on IPv6 to the Internet, then you may want to consider an Egress Only IGW as the destination for “::/0” for private subnets. Note your public subnets still will use the standard IGW.

The Egress only IGW resource does what it says, and supplants the need for NAT Gateway as used in IPv4 (more on NAT GW later).

Again, you can add the Egress Only IGW and the Routing changes in several ways:

- Click Ops on the console

- Via the API (including the CLI)

- In your CloudFormation template for your VPC – recommended

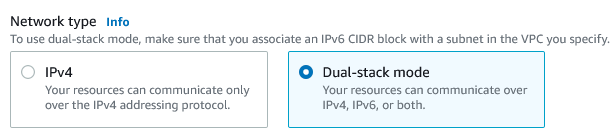

Load Balancer Changes

Now you have public load balancers in public subnets that have IPv6 available, you can modify your load balancer to have it get an IPv6 address. This is yet another action that will have no impact on current traffic.

You can modify the existing load balancers by:

- Click ops on the console

- An API call (including the CLI)

- In your CloudFormation template for your Workload – recommended

Security Group Changes

Now we’re down to the last two items. By default, your security group is closed unless you have made changes. Your typical load balancer will be listening on TCP 80 and/or 443 for web traffic, and be open to the entire [IPv4] Internet with a source of 0.0.0.0/0.

To enable this security group for IPv6, we add a set of rules for source of ::/0 for the same ports you have for IPv4 (typically 80 and 443 for web traffic, different for other protocols).
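As a rough illustration of "same ports, IPv6 source", the sketch below mirrors rules in plain Python. It assumes the rules are held in the shape the EC2 API uses for `IpPermissions` (`IpRanges` with `CidrIp`, `Ipv6Ranges` with `CidrIpv6`); the function name `add_ipv6_ranges` is hypothetical, and nothing here calls AWS. It only builds the data you would then put in your template or API call.

```python
def add_ipv6_ranges(permissions):
    """Return a copy of EC2-style IpPermissions in which every rule that is
    open to 0.0.0.0/0 is also open to ::/0 on the same protocol and ports."""
    result = []
    for perm in permissions:
        updated = dict(perm)  # shallow copy; the input list is left untouched
        v4_open = any(
            r.get("CidrIp") == "0.0.0.0/0" for r in perm.get("IpRanges", [])
        )
        v6_ranges = list(perm.get("Ipv6Ranges", []))
        v6_open = any(r.get("CidrIpv6") == "::/0" for r in v6_ranges)
        if v4_open and not v6_open:
            v6_ranges.append({"CidrIpv6": "::/0"})
        updated["Ipv6Ranges"] = v6_ranges
        result.append(updated)
    return result


# Example: a typical web-facing load balancer security group (IPv4 only).
existing = [
    {"IpProtocol": "tcp", "FromPort": 80, "ToPort": 80,
     "IpRanges": [{"CidrIp": "0.0.0.0/0"}]},
    {"IpProtocol": "tcp", "FromPort": 443, "ToPort": 443,
     "IpRanges": [{"CidrIp": "0.0.0.0/0"}]},
]
for rule in add_ipv6_ranges(existing):
    print(rule["FromPort"], rule["IpRanges"], rule["Ipv6Ranges"])
```

Rules restricted to specific IPv4 sources are deliberately left alone: there is no automatic IPv6 equivalent for them, so those need a human decision.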

It’s at this time you can now test connectivity to your load balancer using IPv6 end-to-end – assuming you have another end on the IPv6 Internet somewhere.

If your workstation/cellphone is using IPv6, then you could browse to the IPv6 address (wrapped in square brackets in the URL), but you’ll probably get a certificate warning as the name in the certificate doesn’t match the raw IP address.

If you’re not familiar yet, this should also be a CloudFormation template update.

DNS Changes

This is when we announce to the world that your service can be accessed with IPv6. You want to make sure you have done the above test to ensure you can connect, as this is the final piece in the puzzle.

Typically a custom DNS name for a load balancer is a Route53 ALIAS record of type A (Address). The custom DNS name is what also appears in any TLS Certificates.

To finally flick the switch on IPv6, you add an additional Route53 ALIAS record of type AAAA (four As), with the destination being the same as you have used for the existing Alias A record (one A).

You should now be able to check that you can resolve your service using the nslookup utility. From a command prompt or PowerShell, type:

nslookup -type=AAAA my.custom.load.balancer.name

nslookup -type=A my.custom.load.balancer.name
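If you would rather script this check, the following is a minimal sketch using Python's standard `socket.getaddrinfo`. The function name `addresses_by_family` and its `resolver` parameter are hypothetical (the parameter exists only so the lookup can be swapped out when testing offline), and the hostname in the usage comment is the same placeholder used above.

```python
import socket


def addresses_by_family(hostname, port=443, resolver=socket.getaddrinfo):
    """Resolve hostname separately for IPv4 (A) and IPv6 (AAAA) and
    return the addresses found for each, as a dict of sets."""
    found = {"A": set(), "AAAA": set()}
    for family, label in ((socket.AF_INET, "A"), (socket.AF_INET6, "AAAA")):
        try:
            results = resolver(hostname, port, family, socket.SOCK_STREAM)
        except socket.gaierror:
            continue  # no records (or no resolver path) for this family
        for _family, _type, _proto, _canonname, sockaddr in results:
            found[label].add(sockaddr[0])  # first element is the address
    return found


# Usage (needs working DNS, so it is left as a comment here):
#   result = addresses_by_family("my.custom.load.balancer.name")
#   print("dual-stack" if result["A"] and result["AAAA"] else "not yet")
```

An empty `AAAA` set after the DNS change usually just means the record has not propagated or was created with the wrong type, so it is worth re-checking the Route53 entry before suspecting the load balancer.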

Your Dependencies

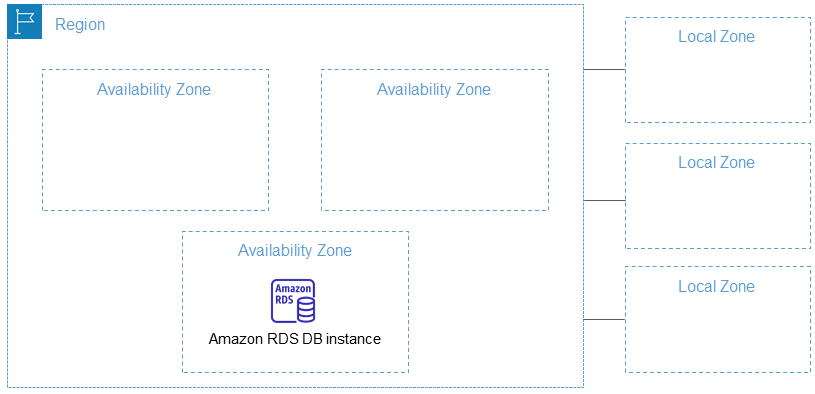

Now you’re up and running, you need to think about the services you depend upon. Services within your VPC, such as RDS, require AWS to enable these to be dual stack. Some services already are, such as the Link-Local MetaData service, Time Sync Service and VPC DNS resolver (note: always use the DNS resolver).

Some services will be outside of your VPC but still AWS-run, like SQS, and S3: in which case, look to use VPC Endpoints to communicate with them.

But other third party resources across the Internet may be stuck back on IPv4. If you have an EC2 Linux Instance then it’s sometimes worth running tcpdump to inspect the traffic you see using IPv4. A command like tcpdump ip and port not 22 may be useful. You can extend that to also exclude HTTP/HTTPS traffic with tcpdump ip and port not 22 and port not 80 and port not 443. Remember, your service port on your instance may be a different number on the inside of your network.

You’ll need to ask your dependencies to include dual-stack support on their services. In the meantime, you’ll have to fall back to using IPv4 from your network to communicate with these dependencies. There are two ways this can happen:

- If the subnet with your EC2 instance in it is dual-stack, then the host can use an IPv4 connection itself, possibly via a NAT Gateway, to communicate with the external IPv4 dependency

- If the subnet with your EC2 instance is IPv6 only (which is rather new), then the subnet can be configured to use DNS64 addressing (a subnet level configuration), and can route its traffic via the NAT GW, which will translate from IPv6 on the VPC-internal network, to IPv4 across the Internet (and back). See this.
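To show what DNS64 is doing in the second case, here is a small sketch of the address mapping, assuming the well-known NAT64 prefix 64:ff9b::/96 from RFC 6052. The function names `synthesize` and `extract` are hypothetical; in practice the resolver and the NAT Gateway do this for you.

```python
import ipaddress

# Well-known NAT64/DNS64 prefix (RFC 6052); assumed here for illustration.
NAT64_PREFIX = ipaddress.ip_network("64:ff9b::/96")


def synthesize(ipv4):
    """Embed an IPv4 address in the low 32 bits of the NAT64 prefix,
    as a DNS64 resolver does when a name has only an A record."""
    v4 = ipaddress.IPv4Address(ipv4)
    return ipaddress.IPv6Address(int(NAT64_PREFIX.network_address) | int(v4))


def extract(ipv6):
    """Recover the IPv4 destination from a synthesized address,
    as the NAT64 translator does before forwarding over IPv4."""
    v6 = ipaddress.IPv6Address(ipv6)
    if v6 not in NAT64_PREFIX:
        raise ValueError(f"{v6} is not inside {NAT64_PREFIX}")
    return ipaddress.IPv4Address(int(v6) & 0xFFFFFFFF)


synthetic = synthesize("192.0.2.33")   # 192.0.2.33 is a documentation address
print(synthetic)                       # 64:ff9b::c000:221
print(extract(synthetic))              # 192.0.2.33
```

The IPv6-only host simply sees an AAAA answer and connects to it; the route for 64:ff9b::/96 towards the NAT Gateway is what sends that traffic to be translated.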

Moving to IPv6 only internal networks is a long term goal, probably in the order of half a decade or so. A number of additional AWS updates will be needed before this becomes a default.

Additional IPv6 Notes in AWS

In this transition period (which has been going for nearly 25 years), you’re going to find stuff that silently falls back to IPv4. With hosts able to simultaneously have two addresses (IPv4 A, and IPv6 AAAA), things that look them up have a choice. For most things the preference is the newer AAAA, with a fall-back to A if needed (see the Happy Eyeballs RFC).

However, at this time (Mar 2022), CloudFront still preferences IPv4 origins when the origin is dual-stack. CloudFront also still uses TLS 1.2 instead of the newer and faster TLS 1.3, and HTTP/1.1 instead of the slightly more efficient HTTP/2 request protocol.

AWS IoT Core exposes IPv4 endpoints, which is unusual as a key element of IoT is having millions of devices connected, a situation best served by IPv6.

Similar considerations exist for Route53 Health Checks, and others.

Summary

If you’re thinking this is all very new in cloud, you’d be mistaken. I was transitioning customer environments (including production) in AWS to dual stack in 2018 – four years ago. I’ve been dual-stack for my home Internet connection since I swapped to Aussie Broadband (I churned away from iiNet, who once had an IPv6 blog and strong implementation plans).

For several years, Australia’s dominant telco, Telstra, has had IPv6 dual stack for its consumer mobile broadband, something that the other players like Optus are yet to enable.

But these changes are inevitable.

The future is here, it’s just not evenly distributed.